As the Reddit mods get ready for the June 12-14 blackout, there is some anticipation that an influx of users will shift over to many of the Lemmy instances as they seek out a home to post their internet memes and discuss their interests.

In anticipation of this increased volume I will be growing our current instance from

- 16 CPU

- 8 GB RAM

to

- 24 CPU

- 64 GB RAM

This server is currently equipped with SSDs configured in a RAID 10 array (NVMe drives will come in the next generation that gets deployed).

Earlier today I also configured some monitoring that I’ll be watching closely in order to have a better understanding of how the Lemmy platform does under stress (for science!).

I’ll be sharing graphs and some other insights in this thread for everyone who is interested. Feel free to ask about anything you’d like to know more about!

EDIT: I’ll be posting and updating the graphs in this main post periodically! Last updated: 6:21AM ET June 12th

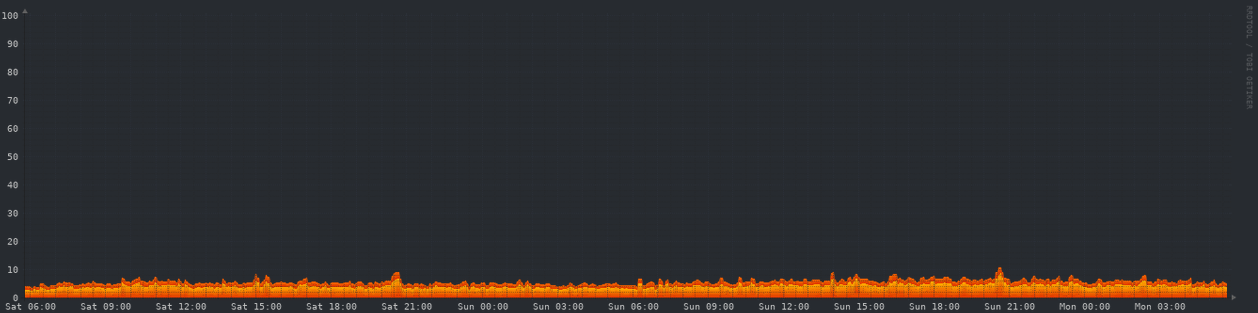

CPU - 48 hours

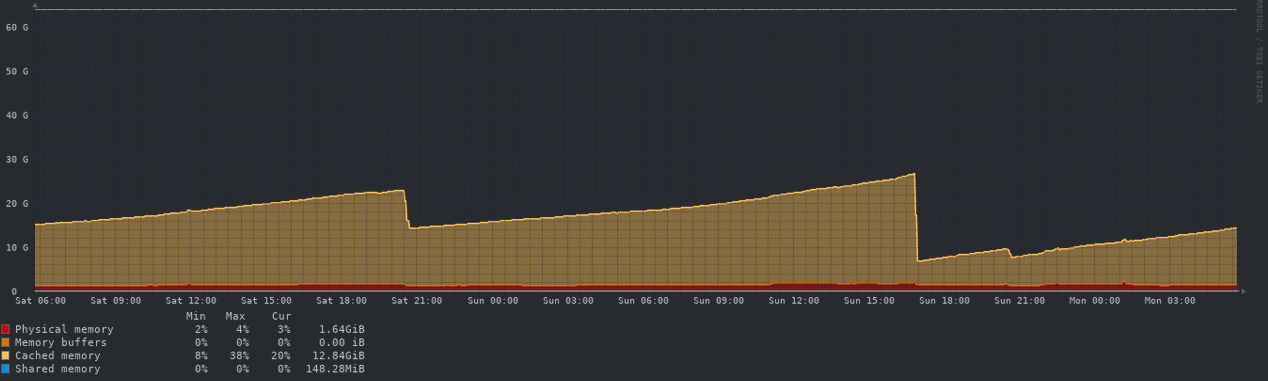

Memory - 48 hours

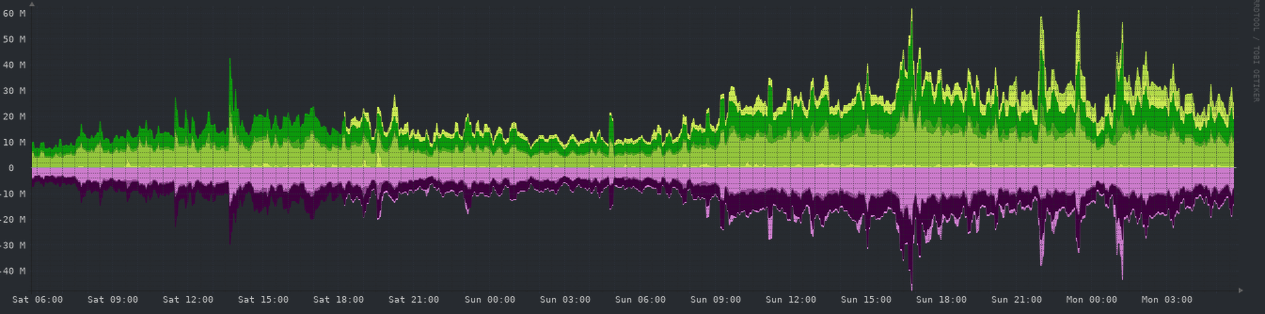

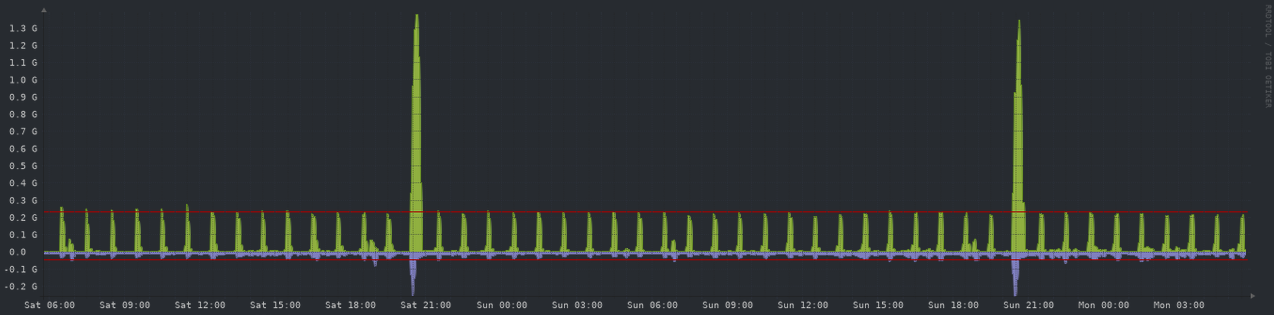

Network - 48 hours

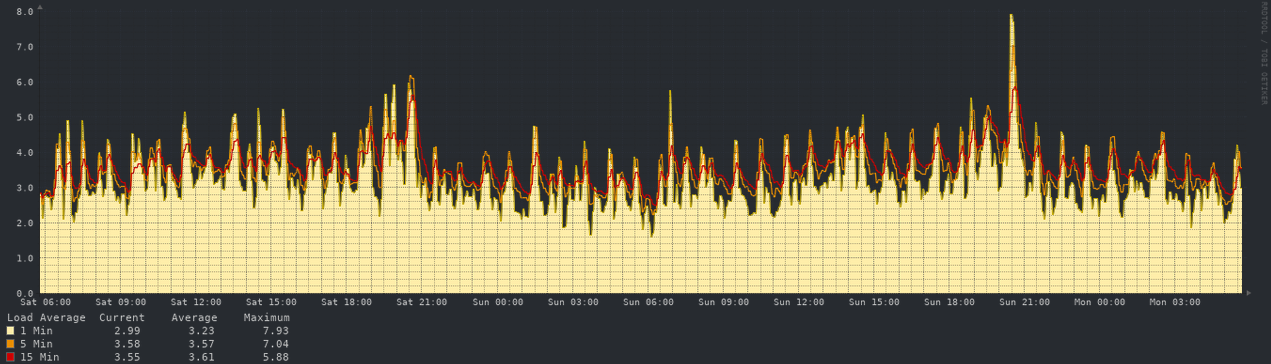

Load Average - 48 hours

System Disk I/O - 48 hours

It probably doesn’t need that much, but I’m curious to see how much it can handle until it can’t. I have more resources available, but past this size it would make more sense to start separating the different components of Lemmy into their own individual instances across multiple servers instead of trying to scale it vertically. That might require a little more time than I have, but I’ll see what I can manage!

huh, interesting.

How much room is there right now for lemmy to scale horizontally?

A couple hundred gigs’ worth of RAM and around 100 cores. I’m not sure I would want to bring it to that size without first thinking of a plan to properly maintain, manage, and support it.

Just joined. I tried a couple of instances but couldn’t get signed up. Yours worked!

Could Lemmy handle having the same instance running on multiple servers with a load balancer and database syncing?

I don’t know anything, just asking lol

Welcome! In short, it is possible, but it would require some engineering to add a Kubernetes-style deployment. That is already more than the typical user can handle, so it’s likely not something the Lemmy developers would support themselves; it would require some SREs or DevOps engineers to review, deploy, and maintain that type of deployment. That’s unfortunately more time than I have to give currently.
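For anyone curious what "multiple servers behind a load balancer" means in practice, here is a rough sketch of the idea in Python. It is not how Lemmy or Kubernetes does it: the `Backend` and `RoundRobinBalancer` names and the `app-1`/`app-2`/`app-3` addresses are made up for illustration. It only shows requests being handed out in turn to whichever copies of the app are healthy, and it says nothing about the database side of the question.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import List


@dataclass
class Backend:
    """One copy of the app server (hypothetical name, for illustration only)."""
    address: str
    healthy: bool = True


@dataclass
class RoundRobinBalancer:
    """Hands out backends in turn, skipping any marked unhealthy."""
    backends: List[Backend]
    _counter: count = field(default_factory=count, repr=False)

    def pick(self) -> Backend:
        # Try each backend at most once per call so we never loop forever.
        for _ in range(len(self.backends)):
            candidate = self.backends[next(self._counter) % len(self.backends)]
            if candidate.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    pool = RoundRobinBalancer([Backend("app-1"), Backend("app-2"), Backend("app-3")])
    pool.backends[1].healthy = False  # pretend app-2 failed its health check
    for _ in range(4):
        print(pool.pick().address)
```

A real deployment would hand this job to a dedicated load balancer or an orchestrator, and the harder part is usually keeping shared state such as the database consistent across the copies.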

Cool, thanks. I was just worried about scalability in general, since people will generally get drawn to the more popular servers. Do you have a Patreon or something to allow donations for server costs?

Hey @[email protected], thank you so much for wanting to show your support! I discuss donations here. In short, I don’t take donations currently, but I am considering it for the future.

You’re welcome. Just want to make sure your server costs are covered at a minimum.

I’m interested to see how this develops.

I don’t have a lot of experience administering Rust apps, but usually single instances hit bottlenecks when trying to utilize resources on a large enough server.

Like others have said, I’m happy to help if there’s anything you need to keep this going. I have many years of Linux and Windows admin experience, observability/instrumentation, and I can code to an extent.