- cross-posted to:

- [email protected]

- [email protected]

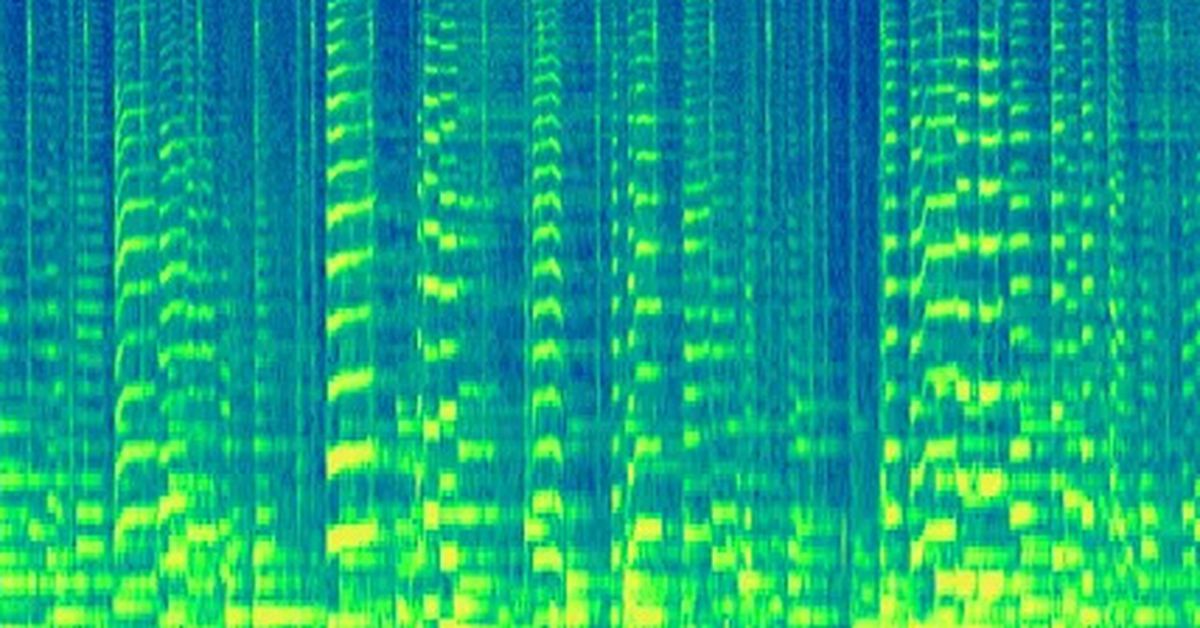

Google is embedding inaudible watermarks right into its AI-generated music

Audio created using Google DeepMind’s AI Lyria model will be watermarked with SynthID to let people identify its AI-generated origins after the fact.

Yeah, like, most people don’t realise, but until about 1900 most piano music was played by humans. Of course, there were no pianists after the invention of the pianola, with its perforated rolls of notes and mechanical keys.

It’s sad. Drums were once things you hit with a stick, but Mr Theremin ensured you never see a drummer anymore, while Mr Moog effectively ended bass and rhythm guitars with the synthesizer…

It’s a shame; it would be fun to go see a four-piece band performing live, but that’s impossible now that no one plays instruments anymore.

People are never going to stop learning to play instruments. If anything, they’ll get inspired by using AI to make music, and it’ll get them interested in learning to play. They’ll then use AI tools to help them learn, and when they become truly skilled with their instrument, they’ll meet up with some awesomely talented friends to form a band that creates painfully boring and indulgent branded rock.

Those are false equivalencies, though, because all of them still required human input to work. AI-generated music can be entirely automated: just put in a prompt, tell it to generate 10, and it’ll do the rest for you. Set up enough servers and write enough prompts, and you can have hundreds of distinct pieces of AI music put online every minute.

Realistically, putting aside sentimental value, there isn’t a single piece of music humans have made that an AI couldn’t make. But I hope your optimism turns out to be right :/

I sort of think this is looking at it wrong. That’s treating music more like a product to be consumed, rather than something to be engaged with because it engenders human creativity and meaning. That’s sort of why this whole debate is flawed from the start, imo. We shouldn’t be looking at AI as a thing we can use just to take music, or art, or whatever, out of human hands; we should be looking at it as a nice tool that can potentially increase creativity by getting rid of the shit I don’t wanna deal with, or by making some part of the process easier. This is less applicable to music, because you can literally just burn a CD of riffs, riffs, and more riffs (Buckethead?), but for art, what if you don’t wanna do line art and just wanna do shading? Bad example, because you can actually just download line art online, or just paint in a normal style, lineless or whatever. But what if you wanna do line art without doing the shading and making “real” or “whole” art? Bad example, actually: you can just sketch shit out and then post it, and plenty of people do. But you get the point, anyways.

Actually, you don’t get the point, because I haven’t made one. The example I always think of is Klaus. They used AI, or neural networks, or deep learning, or matrix calculation, or whatever, who cares, to automate the three-dimensional shading of the 2D art, something that would be pretty hard to do by hand and pretty hard to automate without AI. To do it well, at least. That’s an easy example of a good use. It’s a stylistic choice, it’s very intentional, it distinguishes the work, and it does something you couldn’t otherwise do for this production, so it increased the capacity of the studio. It added something and otherwise didn’t really replace anyone. It enabled the creation of art that otherwise wouldn’t have existed, and it was an intentional choice that didn’t just add bullshit; it allowed them to retain their artistic integrity. You could do this with pretty much any piece of art, if you so desired. I think this could probably be the case for music as well, just as T-Pain uses autotune (or pitch correction, I forget the difference) to great effect.

I like these examples. Taken to the extreme, I would still consider a piece of AI-generated sheet music played by a human musician to be art, but I guess it’s all subjective in the end. For music specifically, I’ve always been more into the emotional side of it, so as long as the artist is feeling something, I can appreciate it.

For sure it would be art; there are a bunch of ways to interpret what’s going on there. Maybe the human adds something through the timing and expression of how they play the piece, so maybe it’s about how a human expresses freedom in the smallest of ways even when dictated to by some relatively arbitrary set of rules. Maybe it’s about how both can come together to create a piece of music harmoniously. Maybe it’s about inverting the conventional structure, where you would compose music and then it would be distributed on, like, hole-punched paper to automated pianos; now the pianos write the songs and the humans play them. Maybe it’s about how humans are oppressed by the technology they have created. Maybe it’s about all of that, maybe it’s about none of that, maybe some guy just wanted to do it ’cause it was cool.

I think that’s kind of why I feel the way I do. I don’t dislike AI stuff, but I think people think about it wrong. Art is about communication, to me. A photo can be purely of nature, and in that way it is just natural, but the photographer makes choices when they frame the picture. What perspective are they showing you? How is the shot lit? What lens? Yadda yadda. Someone shows you a rock on the beach. Why that rock specifically? With AI, I can try to intuit what someone typed in to get that picture out of the engine. I can try to work out what the inputs were, and I can even guess which outputs they rejected and why they went with this one over those. But ultimately I get something that is more of a woo-woo product meant to impress venture capital than something made with intention, or presented with intention. I get something that is just an engine for more fucking internet spam, which we’re going to have to use the same technology to filter out so I can get real meaning and real communication instead of the shadows of it.