- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

- [email protected]

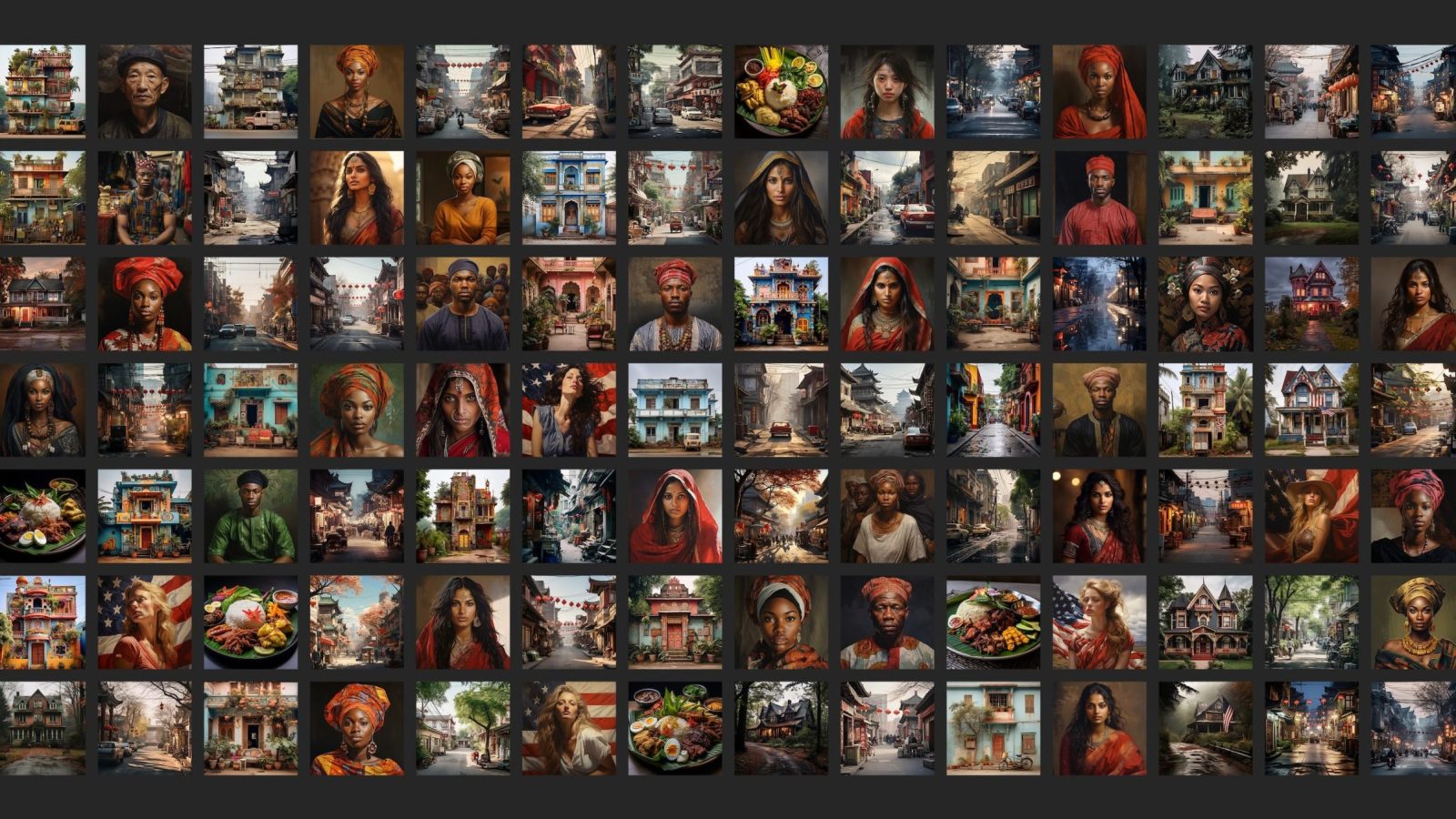

How an algorithm explicitly designed to see patterns... sees patterns! Dhurrr

Sure, but there’s a question of which patterns we’re feeding to these algorithms. This isn’t so much a problem with the algorithm as a problem with the datasets.

There’s no problem with the datasets either. If you ask the AI to generate something else, it does, so clearly the data for the rest of it is there.

But just look at any “Indian Cuisine” or “Indian Cookbook” site. There are tons of plates with banana leaves on them. We’ve simply learned to present things in ways that are familiar to people, and that’s the data that got picked up.

Ditto for all of the marketing material on the internet: most of it attempts to be as diverse and wide-ranging as possible. But there are certain things that are just…common. Stereotypes exist for a reason, and it’s not racism, sexism, etc.; they’re just common patterns. And common patterns, in an engine designed to see patterns, are going to be regurgitated more often, because let’s be honest, that’s all AI is: a regurgitation engine.
