Google researchers have come out with a new paper that warns that generative AI is ruining vast swaths of the internet with fake content — which is painfully ironic because Google has been hard at work pushing the same technology to its enormous user base.

It’s like they’re releasing the manual for what they’re doing.

It’s like a free ride when you’ve already paid

It’s like good advice that you just didn’t take

A traffic jam when you’re already late

generative Alanis Intelligence

The call is coming from inside the house.

It’s in the fracking ship!!

Google: “how dare someone else be better at ruining the internet than us”

Facebook: “Hold my beer. I’ll show you who’s the real cancer of the internet.”

I think Twitter took the throne

I disagree, you can completely avoid both FB and Twitter. You’re not going to find any valuable info on either, except maybe some cutting edge current event things on Twitter. If either of them were completely wiped tomorrow my life would be unchanged.

Google on the other hand used to be a great resource for finding good info, but that’s ruined and getting worse as we speak. I’d argue its decline is significantly more impactful than what’s happened to FB/Twitter. It impacts me on a daily basis.

Ok yeah, I think you’re right. Plus Google is everywhere

That is a valid point. Google actually provides plenty of utility while also spying on everyone. However, Meta provides a little bit of something to some individuals, but mostly just a whole bunch of nothing to most people, while still spying on everyone.

One of the most annoying things is restaurants that can’t be bothered to make a real website. Instead, they think a FB page is good enough. I’ve even seen some pages that ask me to log in just to check the restaurant’s opening hours. Well, what if I don’t even have a FB account? I guess I’ll just walk to that other restaurant that actually has a proper website.

An LLM is an insanely productive content creator. We can’t say how much of the web is generated by LLMs at any given moment (and that’s ignoring older copypaste articles), but the share of organic material that one wants to prioritise in machine learning shrinks significantly. This tech, if its learning material is not isolated from its own output, predictably falls into a feedback loop, and with each cycle it is going to get worse.

Surprisingly, pre-LLM-boom datasets may well become more valuable than contemporary ones.

Garbage in, garbage out

I remember reading that from 2021 to 2023, LLMs generated more text than all humans had ever published combined, so arguably, genuinely human-generated text is going to be a rarity

This person already has a vague-sounding meeting on their calendar with an HR rep, their supervisor, and maybe a VP. To align vision and expectations.

Ironic.

“Manipulation of human likeness and falsification of evidence underlie the most prevalent tactics in real-world cases of misuse,” the researchers conclude. “Most of these were deployed with a discernible intent to influence public opinion, enable scam or fraudulent activities, or to generate profit.”

Who could have seen that coming? But in all seriousness, this is exactly why so many people have been so vehemently opposed to generative AI. It’s not because it can’t be useful. It’s literally because of how it is actively being used.

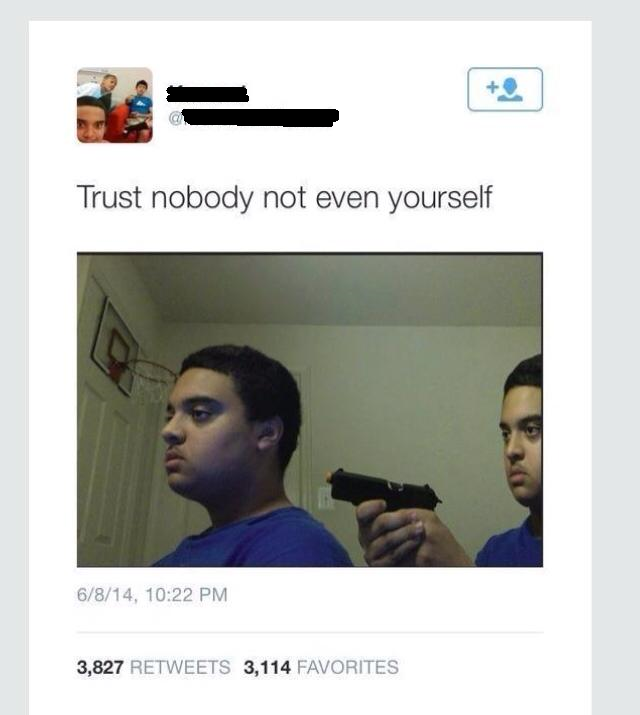

What’s the reverse of Obama giving Obama a medal?

Obama taking it back from Obama? It may as well be the same picture.

“Google looking for a different guy to blame for search enshittification when internal documents point to them ruining it long before AI was a problem”

Isn’t Google now just pointing the finger at someone else for ruining the net?

We’re all trying to find the guy who did this!

The paper: https://arxiv.org/pdf/2406.13843

It’s AI, not Google, Reddit, Musk, Meta… just AI

The paper: “I’m gonna nail you, sucker. I’m gonna grind you up”

they knew…