Today, lemmy.amxl.com suffered an outage because the rootful Lemmy podman container crashed out, and wouldn’t restart.

Fixing it turned out to be more complicated than I expected, so I’m documenting the steps here in case anyone else has a similar issue with a podman container.

I tried restarting it, but got an unexpected error saying that the internal IP address (which I hand-assign to containers) was already in use, even though the container wasn’t running.

I create my Lemmy services with podman-compose, so I deleted them with `podman-compose down` and then re-created them with `podman-compose up`; that usually fixes things when they are really broken. But this time, I got a message like:

`level=error msg="IPAM error: requested ip address 172.19.10.11 is already allocated to container ID 36e1a622f261862d592b7ceb05db776051003a4422d6502ea483f275b5c390f2"`

The only problem is that the referenced container didn’t exist at all in the output of `podman ps -a`. In other words, podman thought the IP address was in use by a container it didn’t know anything about! The IP address had effectively been ‘leaked’.
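If you want to spot this situation from a script rather than by eye, something like the following Python sketch would do it. It assumes the error text looks like the single line quoted above (other Podman versions may word it differently) and that you feed it the full container IDs Podman knows about, e.g. from `podman ps -a --no-trunc --format '{{.ID}}'`. `find_leaked_allocation` is just an illustrative name.

```python
import re
from typing import Optional, Set, Tuple

# Pattern modeled on the one error line quoted in the post; assumed, not a stable format.
IPAM_CONFLICT = re.compile(
    r"requested ip address (?P<ip>[0-9a-fA-F.:]+) is already allocated "
    r"to container ID (?P<cid>[0-9a-f]{12,64})"
)


def find_leaked_allocation(error_line: str, known_ids: Set[str]) -> Optional[Tuple[str, str]]:
    """Return (ip, container_id) if the error names a container Podman doesn't know, else None."""
    match = IPAM_CONFLICT.search(error_line)
    if match is None:
        return None  # not an IPAM conflict message
    ip, cid = match.group("ip"), match.group("cid")
    # Prefix comparison so both short (12-char) and full IDs count as a match.
    if any(known.startswith(cid) or cid.startswith(known) for known in known_ids if known):
        return None  # the owning container really exists, so nothing leaked
    return ip, cid


if __name__ == "__main__":
    err = ('level=error msg="IPAM error: requested ip address 172.19.10.11 '
           'is already allocated to container ID '
           '36e1a622f261862d592b7ceb05db776051003a4422d6502ea483f275b5c390f2"')
    # Hypothetical `podman ps -a --no-trunc --format '{{.ID}}'` output with one unrelated container.
    ps_output = "0123456789abcdef0123456789abcdef0123456789abcdef0123456789abcdef\n"
    known = {line.strip() for line in ps_output.splitlines()}
    print(find_leaked_allocation(err, known))
```

A non-`None` result is exactly the case in this post: an IP held by a container ID that `podman ps -a` doesn't list.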

After digging into the internals, and a few false starts trying to track down where the leaked info lived, I found it in a BoltDB file at `/run/containers/networks/ipam.db`, which is apparently the ‘IP allocation’ database. The good thing about `/run` is that it is wiped on system restart, but I didn’t really want to restart all my containers just to fix Lemmy.

BoltDB doesn’t come with a lot of tools, but you can install a TUI editor like this: `go install github.com/br0xen/boltbrowser@latest`.

I made a backup of /run/containers/networks/ipam.db just in case I screwed it up.
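For the backup step, a minimal Python sketch (the function name and the `.bak-<timestamp>` naming are my own illustration, not anything Podman uses). Since `/run` is wiped on reboot, pointing `dest_dir` somewhere persistent is probably wise, and the copy is ideally taken while no container is starting or stopping, so the file isn’t being written mid-copy.

```python
import shutil
import time
from pathlib import Path
from typing import Optional


def backup_file(path: str, dest_dir: Optional[str] = None,
                suffix: Optional[str] = None) -> Path:
    """Copy `path` to `<dest_dir>/<name>.bak-<suffix>`; suffix defaults to a timestamp."""
    src = Path(path)
    target_dir = Path(dest_dir) if dest_dir else src.parent
    stamp = suffix or time.strftime("%Y%m%d-%H%M%S")
    dst = target_dir / f"{src.name}.bak-{stamp}"
    if dst.exists():
        raise FileExistsError(dst)  # never overwrite an earlier backup
    shutil.copy2(src, dst)  # copy2 also preserves timestamps and permission bits
    return dst
```

Run as root, `backup_file("/run/containers/networks/ipam.db", dest_dir="/root")` would leave a timestamped copy outside `/run`.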

Then I ran sudo ~/go/bin/boltbrowser /run/containers/networks/ipam.db to open the DB (this will lock the DB and stop any containers starting or otherwise changing IP statuses until you exit).
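The locking comes from BoltDB itself: as far as I know, the Go implementation (bbolt) takes an exclusive `flock()` on the file when it is opened read-write, so anything else that needs the database, including Podman’s IPAM, has to wait. A small Python sketch to check whether something is currently holding that lock (Linux/Unix only; `is_flocked` is an illustrative name):

```python
import fcntl
import os


def is_flocked(path: str) -> bool:
    """Return True if another open file holds an exclusive flock() on `path`."""
    fd = os.open(path, os.O_RDONLY)
    try:
        # A non-blocking shared lock only fails if someone holds an exclusive
        # lock, which is what a read-write BoltDB handle is assumed to take.
        fcntl.flock(fd, fcntl.LOCK_SH | fcntl.LOCK_NB)
    except BlockingIOError:
        return True
    else:
        fcntl.flock(fd, fcntl.LOCK_UN)  # we got the lock, so hand it straight back
        return False
    finally:
        os.close(fd)
```

Calling `is_flocked("/run/containers/networks/ipam.db")` as root while boltbrowser is open should return `True`, and `False` once you’ve quit it.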

I found the impacted networks and expanded their buckets (BoltDB has a hierarchy of buckets, which eventually contain key/value pairs), and then the buckets for the CIDR ranges the leaked IP was in. In that list, I found a record whose value was the ID of the container that didn’t actually exist, and pressed `D` to tell boltbrowser to delete that key/value pair. I also cleaned up the `ids` bucket, where this time the key was the ID of the nonexistent container, and repeated all of this for both networks my container was in.
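To make the bucket layout concrete, here is a Python sketch that models the database as nested dicts and finds the same kind of stale entries I deleted by hand. It does not read a real BoltDB file. The layout (a bucket per network, containing a bucket per CIDR whose keys are IPs and values are container IDs, plus an `ids` bucket keyed by container ID) is only my reading of what boltbrowser showed; real databases may hold other buckets or keys, which the sketch deliberately leaves alone. The network name in the test data is hypothetical.

```python
import re
from typing import Dict, List, Set, Tuple

# Hypothetical in-memory model of ipam.db: {network: {bucket: {key: value}}}
Ipam = Dict[str, Dict[str, dict]]
Entry = Tuple[str, str, str]  # (network, bucket, key)

CONTAINER_ID = re.compile(r"[0-9a-f]{64}")


def find_stale_entries(ipam: Ipam, live_ids: Set[str]) -> List[Entry]:
    """Find entries that point at container IDs Podman no longer knows about."""
    stale: List[Entry] = []
    for network, buckets in ipam.items():
        for bucket, entries in buckets.items():
            if bucket == "ids":
                owners = {key: key for key in entries}  # key is the container ID
            elif "/" in bucket:
                owners = dict(entries)  # subnet bucket named by CIDR: value is the ID
            else:
                continue  # unknown bucket: leave it alone
            for key, owner in owners.items():
                if isinstance(owner, str) and CONTAINER_ID.fullmatch(owner) \
                        and owner not in live_ids:
                    stale.append((network, bucket, key))
    return stale


def purge(ipam: Ipam, stale: List[Entry]) -> None:
    """Delete the given entries, like pressing D on each one in boltbrowser."""
    for network, bucket, key in stale:
        del ipam[network][bucket][key]
```

Finding first and deleting afterwards avoids mutating a dict while iterating over it, and mirrors doing the hand edit only after you’ve confirmed what’s stale.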

I then exited out of boltbrowser with q.

After that, I brought my Lemmy containers back up with podman-compose up -d - and everything then worked cleanly.

Good debugging!

I’m thinking it’s best for production to use dynamic IP addresses to avoid this kind of conflict. In the Kubernetes space, all containers must have dynamic IP addresses, which are then tracked by an eBPF load balancer with a (somewhat) static IP.

Yeah, inside of Pods you can just use the container name and thus avoid hard-coding any IPs.

All containers in a pod share an IP, so you can just use localhost: https://www.baeldung.com/ops/kubernetes-pods-sidecar-containers

Between pods, the universal pattern is to add a Service for your pod(s) and just use the name of the Service to connect to the pods it is tracking. Internally, the Service is a load balancer, running on top of kube-proxy or Cilium eBPF, and it tracks all the pods that match the correct labels. It also takes advantage of the kubelet’s health checks to connect/disconnect dying pods. kube-dns/CoreDNS resolves DNS names for all of the Services in the cluster, so you never have to use raw IP addresses in Kubernetes.
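A toy Python model of that idea, to show how a Service finds its pods: it selects pods by label, drops the ones failing readiness, and spreads requests over whatever is left. This is illustrative only. Real kube-proxy programs iptables/IPVS rules (or Cilium programs BPF maps), and kube-proxy’s iptables mode picks backends randomly rather than round-robin; the pod names, IPs and labels are made up.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pod:
    name: str
    ip: str
    labels: Dict[str, str]
    ready: bool = True  # stands in for the kubelet's readiness probe result


@dataclass
class Service:
    selector: Dict[str, str]
    _counter: itertools.count = field(default_factory=itertools.count)

    def endpoints(self, pods: List[Pod]) -> List[str]:
        """IPs of ready pods whose labels contain every selector key/value."""
        return [
            p.ip for p in pods
            if p.ready and all(p.labels.get(k) == v for k, v in self.selector.items())
        ]

    def pick(self, pods: List[Pod]) -> str:
        """Round-robin over the current endpoints (a simplification, see above)."""
        eps = self.endpoints(pods)
        if not eps:
            raise RuntimeError("no ready endpoints")
        return eps[next(self._counter) % len(eps)]
```

Because endpoints are recomputed from labels and readiness on every call, a pod that gets replaced with a new IP is picked up automatically, which is why nobody needs to hard-code addresses.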

I was talking about Podman Pods. Sorry for not being clear.

ah ok

Every time I feel like I finally get kubernetes, somebody surprises me by talking about very specific, complex modern technologies being used to do basically the same thing we’ve been doing for decades by simple tools.

And I always experience the same urge to re-grow a tail and climb a tree.

Oh definitely, everything in kubernetes can be explained (and implemented) with decades-old technology.

The reason why Kubernetes is so special is that it automates it all in a very standardized way. All the vendors come together and support a single API for management which is very easy to write automation for.

There are standard, well-documented “wizards” for creating databases, load-balancers, firewalls, WAFs, reverse proxies, etc. And the management for your containers is extremely robust and extensive, with features like automated replicas, health checks, self-healing, 10 different kinds of storage drivers, CPU/memory/disk/GPU allocation, and declarative mountable config files. All of that sits on top of an extremely secure and standardized API.

With regard to eBPF being used for load balancers: the company that writes that software, Isovalent, is one of the main maintainers of eBPF in the kernel, and a lot of it was written just to support their Kubernetes CNI, Cilium. It’s used mainly so that you can have systems with hundreds or thousands of containers on a single node, each with its own IP address, firewall, etc. iptables was used for this before, but it started hitting a performance bottleneck on many systems. Everything is automated for you and open-source, so all the ops engineers benefit from the Isovalent team’s development work.
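A rough illustration of why the rule count matters, as a toy Python model rather than real netfilter or BPF code: roughly speaking, with iptables the service rules kube-proxy installs are matched in order, so the work per new connection grows with the number of Services, while Cilium looks the service address up in a BPF hash map keyed on it. The rule format and numbers below are invented for the example.

```python
from typing import Dict, List, Optional, Tuple

Rule = Tuple[str, int, str]  # (service IP, port, backend): a drastically simplified rule


def iptables_style_lookup(rules: List[Rule], ip: str, port: int) -> Tuple[Optional[str], int]:
    """Walk the rules in order, like a chain; return (backend, rules examined)."""
    for examined, (rip, rport, backend) in enumerate(rules, start=1):
        if (rip, rport) == (ip, port):
            return backend, examined
    return None, len(rules)  # no match after scanning everything


def build_map(rules: List[Rule]) -> Dict[Tuple[str, int], str]:
    """Index rules by (ip, port), the way a hash map keyed on the service address would."""
    return {(rip, rport): backend for rip, rport, backend in rules}


if __name__ == "__main__":
    rules = [(f"10.96.{i // 256}.{i % 256}", 80, f"backend-{i}") for i in range(5000)]
    # Matching the last Service means examining all 5000 rules...
    print(iptables_style_lookup(rules, "10.96.19.135", 80))
    # ...while the map answers with a single hashed lookup.
    print(build_map(rules)[("10.96.19.135", 80)])
```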

It definitely moves fast, though. I go to kubecon every year, and every year there’s a whole new set of technologies that were written in the last year lol

At least when doing something non-important like lemmy.

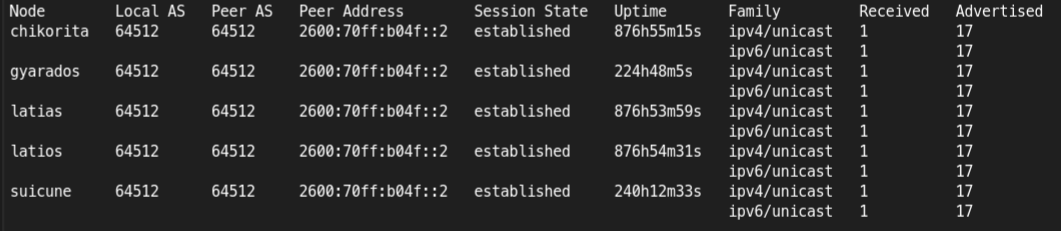

For essential services or a non-redundant environment (e.g. LDAP/DNS and homelab/small businesses), I would still assign a second permanent network with something like macvlans.

Go all out and register your container IPs on your router with BGP 😁

(This comment was sent over a route my automation created with BGP)

Tangentially, what’s your opinion on Traefik?

Works well for me, although with Docker. The labeling can be a bit non-intuitive at first, but it’s really solid.

Also, I simply love autodiscovery.

I’ve inherited it on production systems before; automated service discovery and certificate renewal are definitely what admins should have in 2025. I thought the label/annotation system it used on Docker had some ergonomics/documentation issues, but nothing serious.

It feels like it’s more meant for Docker/Podman though. On Kubernetes I use cert-manager and Gateway API+Project Contour. It does seem like Traefik has support for Gateway API too, so it’s probably a good choice for Kubernetes too?

We’re thinking of moving to it from a custom CoreDNS and Flannel implementation in a 33-node k3s cluster.

Ah, interesting. What kind of customization are you using CoreDNS for? If you don’t have Ingress/Gateway API for your HTTP traffic, Traefik is likely a good option for adopting it.

CoreDNS and an nginx reverse proxy are handling DNS, failover, and some other redirects. However, it’s not ideal, as it’s a custom implementation a previous engineer set up.

Ah, but your DNS discovery and failover aren’t using the built-in Kubernetes Services? Is the nginx using ingress-nginx, or is it custom?

I would definitely look into Ingress or the Gateway API, as these are two standards that the Kubernetes developers are promoting for reverse proxies. Ingress is older and has more features for things like authentication, but the Gateway API is more portable. Both APIs are implemented by a number of controllers, like Nginx, Traefik, Istio, and Project Contour.

It may also be worth creating a second Kubernetes cluster if you’re going to be migrating all the services. Flannel is quite old, and there are newer CNIs like Cilium that offer a lot more features, like eBPF, IPv6, WireGuard, tracing, etc. (Cilium’s implementation of the Gateway API is buggier than other implementations, though.) Cilium is shaping up to be the new standard networking plugin for Kubernetes, and even Red Hat and AWS are starting to adopt it over their proprietary CNIs.

If you guys are in Europe and are looking for consultants, I freelance, and my employer also has a lot of Kubernetes consulting expertise.

It’s a custom nginx proxy to the Kube API; it’s too long to get into. I was hired to move this giant cluster, which started as a lab, and make it production-ready.

Thanks for the feedback

Neat bit of work finding the issue.

What’s the advantage of static container IPs? I’ve never thought about that in all my time using docker.

Had you tried `podman rm -f containerid`?

I believe nothing in the `podman rm` family worked because the container was already gone; it was just the IP allocation that was left.

Podman really isn’t well suited for production workloads. It is nice for simple things but it has a habit of blowing up.

Ideally you should have some sort of cluster with health checking.

Kubernetes comes to mind for that

Kubernetes is a mixed bag. It is extremely powerful but its complexity tends to scare people away. The biggest issue with Kubernetes is that it can become the source of failure when done incorrectly.

I don’t really have a good alternative. I have investigated pacemaker but it has its own challenges.

For now, it is probably best to just set up shared storage and then manually start containers on a host. The idea is that having multiple hosts allows for faster recovery. You can still have health checking per host so that containers get restarted as needed.
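The per-host health checking can be as simple as a loop like this Python sketch: count consecutive failed checks and restart after a threshold. The check and restart callables are placeholders you’d wire up yourself (for example, restart could run `podman restart <name>`). Podman also has built-in health checks (`--health-cmd`, and in newer releases `--health-on-failure=restart`), which may be the simpler route if your version has them.

```python
import time
from typing import Callable, Dict, Optional


def supervise_once(checks: Dict[str, Callable[[], bool]],
                   restart: Callable[[str], None],
                   failures: Dict[str, int],
                   threshold: int = 3) -> None:
    """One round of checks: restart a container after `threshold` consecutive failures."""
    for name, check in checks.items():
        if check():
            failures[name] = 0  # healthy again, reset the streak
            continue
        failures[name] = failures.get(name, 0) + 1
        if failures[name] >= threshold:
            restart(name)
            failures[name] = 0  # give the restarted container a fresh streak


def supervise(checks: Dict[str, Callable[[], bool]],
              restart: Callable[[str], None],
              interval: float = 30.0,
              threshold: int = 3,
              rounds: Optional[int] = None) -> None:
    """Run supervise_once every `interval` seconds, forever unless `rounds` is given."""
    failures: Dict[str, int] = {}
    done = 0
    while rounds is None or done < rounds:
        supervise_once(checks, restart, failures, threshold)
        done += 1
        time.sleep(interval)
```

Requiring several consecutive failures before restarting avoids bouncing a container over a single slow response.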

To simplify running Kubernetes, at least at home, take a look at Talos OS. It is built for Kubernetes. But I totally agree it still isn’t for the faint of heart.

Agreed, Talos or k3s are great for home clusters