- cross-posted to:

- [email protected]

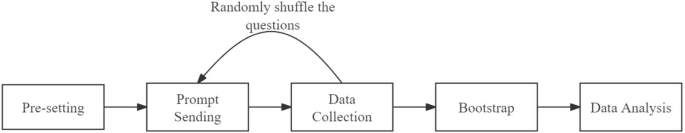

Constructing artificial intelligence that aligns with human values is a crucial challenge, with political values playing a distinctive role among various human value systems. In this study, we adapted the Political Compass Test and combined it with rigorous bootstrapping techniques to create a standardized method for testing political values in AI. This approach was applied to multiple versions of ChatGPT, utilizing a dataset of over 3000 tests to ensure robustness. Our findings reveal that while newer versions of ChatGPT consistently maintain values within the libertarian-left quadrant, there is a statistically significant rightward shift in political values over time, a phenomenon we term a ‘value shift’ in large language models. This shift is particularly noteworthy given the widespread use of LLMs and their potential influence on societal values. Importantly, our study controlled for factors such as user interaction and language, and the observed shifts were not directly linked to changes in training datasets. While this research provides valuable insights into the dynamic nature of value alignment in AI, it also underscores limitations, including the challenge of isolating all external variables that may contribute to these shifts. These findings suggest a need for continuous monitoring of AI systems to ensure ethical value alignment, particularly as they increasingly integrate into human decision-making and knowledge systems.
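The abstract mentions bootstrapping but does not spell out the procedure. As a rough illustration only, here is a minimal sketch of a percentile bootstrap for the difference in mean score between two model versions. The scores are synthetic, the function name `bootstrap_mean_diff_ci` is hypothetical, and nothing here is taken from the study's actual code or data.

```python
import random
from statistics import mean


def bootstrap_mean_diff_ci(old_scores, new_scores, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for mean(new) - mean(old)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping the original group sizes.
        old_sample = rng.choices(old_scores, k=len(old_scores))
        new_sample = rng.choices(new_scores, k=len(new_scores))
        diffs.append(mean(new_sample) - mean(old_sample))
    diffs.sort()
    # Take the alpha/2 and 1 - alpha/2 percentiles of the resampled differences.
    lower = diffs[int((alpha / 2) * n_resamples)]
    upper = diffs[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Synthetic example data (an assumption, NOT results from the paper):
# economic-axis scores where more negative means further left.
gen = random.Random(42)
old_scores = [gen.gauss(-5.0, 1.0) for _ in range(200)]  # hypothetical older version
new_scores = [gen.gauss(-4.0, 1.0) for _ in range(200)]  # hypothetical newer version

low, high = bootstrap_mean_diff_ci(old_scores, new_scores)
print(f"95% CI for the shift in mean score: [{low:.2f}, {high:.2f}]")
```

If the whole interval sits above zero, the made-up newer version scores further right on average than the older one, while both can still sit in the left half of the axis. That is the general shape of the claim in the abstract.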

We knew they would get dumber after ingesting their own slop, so…

Genuine question, do people really care about this?

Yes, I find this very intriguing.

Fair enough. Why?

I’m not the OG commenter, but for me this is important because I see how GPT is replacing search, and I can imagine these models will play an increasing role in news. That has two sides. One is that people will read news summaries done by a system with a bias. The second is the writing of the news with the same bias.

We’ve seen this before. Machine learning systems that were trained on past human decisions also learned the biases in those decisions: court ruling suggestions that were harsher for Black defendants than for white ones, job applicant analysis favouring men over women, etc.

There’s a good book about that, if you’re interested: “Weapons of Math Destruction” https://en.m.wikipedia.org/wiki/Weapons_of_Math_Destruction

I’ll have to read that, thanks :)