While I am glad this ruling went this way, why’d she have to diss Data to make it?

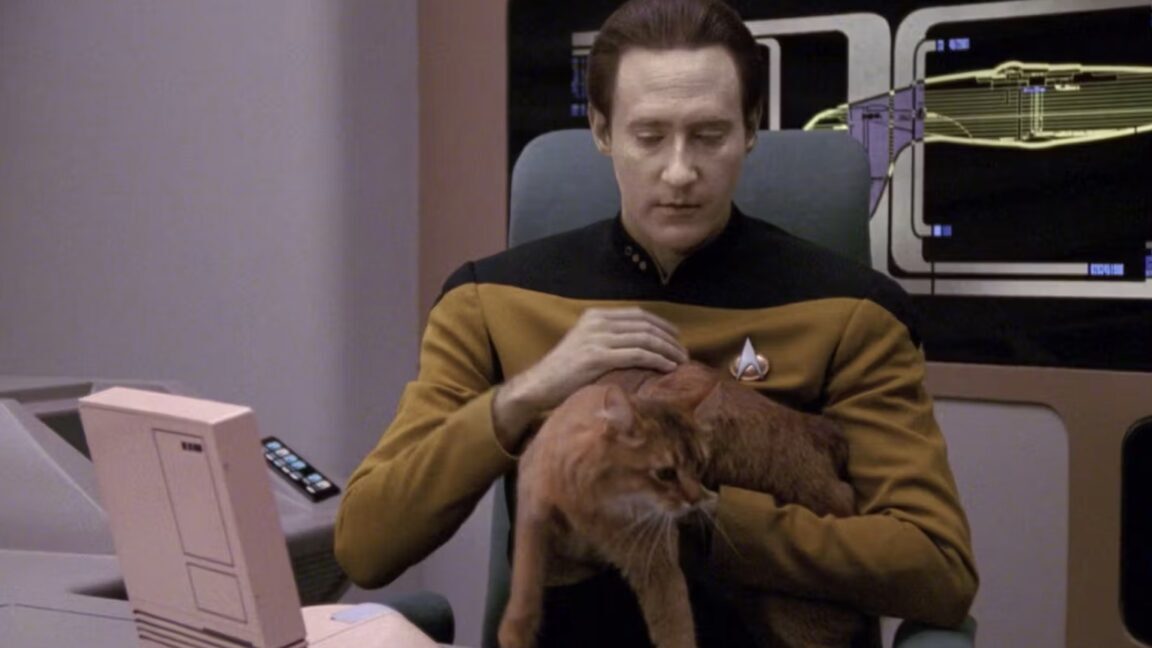

To support her vision of some future technology, Millett pointed to the Star Trek: The Next Generation character Data, a sentient android who memorably wrote a poem to his cat, which is jokingly mocked by other characters in a 1992 episode called “Schisms.” StarTrek.com posted the full poem, but here’s a taste:

"Felis catus is your taxonomic nomenclature, / An endothermic quadruped, carnivorous by nature; / Your visual, olfactory, and auditory senses / Contribute to your hunting skills and natural defenses.

I find myself intrigued by your subvocal oscillations, / A singular development of cat communications / That obviates your basic hedonistic predilection / For a rhythmic stroking of your fur to demonstrate affection."

Data “might be worse than ChatGPT at writing poetry,” but his “intelligence is comparable to that of a human being,” Millett wrote. If AI ever reached Data levels of intelligence, Millett suggested that copyright laws could shift to grant copyrights to AI-authored works. But that time is apparently not now.

My apologies if it seems “nit-picky”. Not my intent. Just that, to my brain, the difference in semantic meaning is very important.

In my thinking, that’s exactly what asking “can an LLM achieve sentience?” is, so I can see the confusion. Because I am strict in classification, it is, to me, literally like asking “can the parahippocampal gyrus achieve sentience?” (probably not by itself - though our meat-computers show extraordinary plasticity… so, maybe?).

Precisely. And I suspect that it is very much related to the constrained context available to any language model. The world, and thought as we know it, is mostly not language. Not everyone has an internal monologue that is verbal/linguistic (some don’t even have one and mine tends to be more abstract when not in the context of verbal things) so, it follows that more than linguistic analysis is necessary.