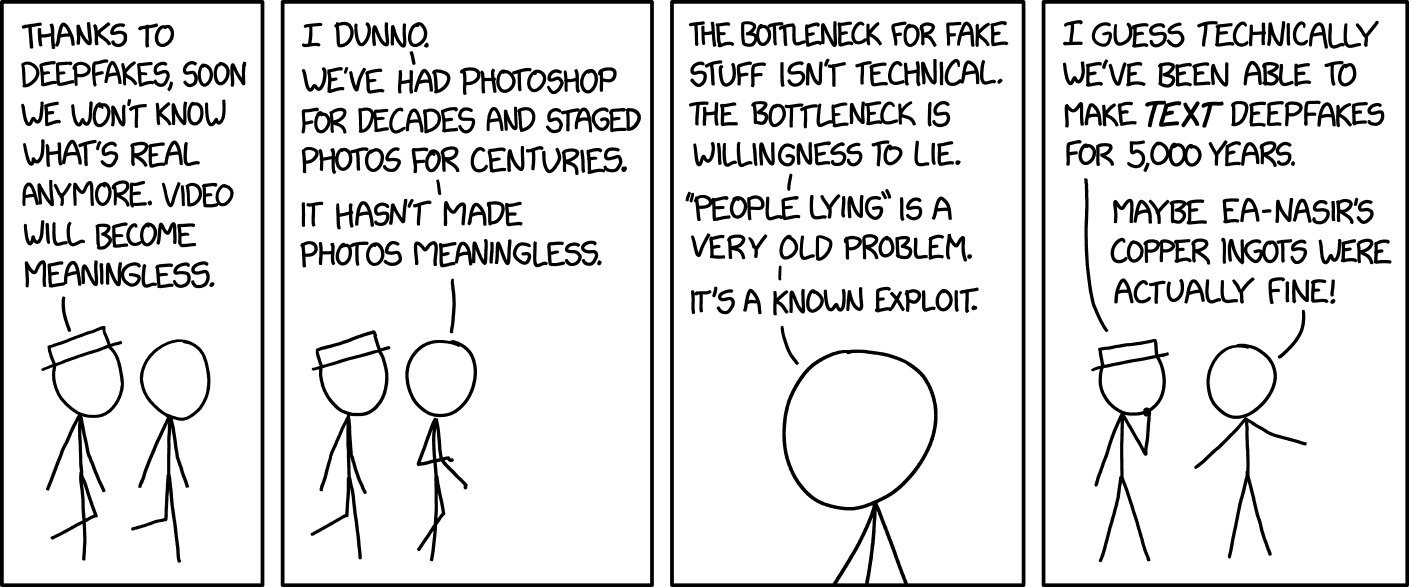

Pictures as proof have been questionable for a while, since a good photoshopper can fake them. Video was much harder to fake. AI still isn’t perfect, but a short moving clip along with AI audio can fool many people now.

Especially in combination with real videos. Like just twisting words or cutting something in between, replacing a word, changing an expression and so on.

Tits or gtfo still relevant

Nice tits

You can stay

Bobs or vagana

Hello pretty lady bobs or vagine

Motherfuckers act like they forgot about photoshop

People act like this hasn’t been a thing for over a century…

Even further back if you think about the abominations of taxidermy that got passed off for merfolk and the like (Fiji mermaid)

“I saw it with my own eyes!”

if it was up to me, you’d motherfuckers stop coming to me with fabricated evidence out.

Lookin up to me like training Ai is free, when my copyright was out you wasn’t paying me.

Pics AND/OR it didn’t happen.

Pics XOR it didn’t happen

My shower thought from yesterday: you can take real photos and make people think it’s AI, just use the Clone Tool poorly on the hands.

I’ve been thinking that it would be fun to take a 100% real photo of a stack of real hands from exactly the right angle, so that it looks like a mutated abomination generated by some stupid AI.

In the future, today’s forms of identity verification, like face unlock, could become useless due to AI.

Biometrics should be usernames, not passwords. Fingerprints, irises, faces, vocal patterns, all of it, no matter how good it is, only identifies the person trying to enter/use something and is somewhat easy to steal without their knowledge.

If you want true security you still need to ask for a passcode that only the now-identified user will know.

And yes, it is still possible to intercept the passcode at the moment that the user interacts with the locking mechanism, but that is completely different from grabbing it when they’re randomly walking down the street, etc.

(Edit to add: I didn’t think this needed to be explained, but I’m not saying biometrics should replace usernames, I’m saying they shouldn’t have replaced passwords. And yes, you can still use biometrics in the authentication process to identify that it’s you, i.e. your username, but you still need a password.)
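
A rough sketch of what I mean, not how any real provider does it. The names `ACCOUNTS`, `enroll` and `authenticate` are made up for illustration, and `biometric_id` stands in for whatever a face or fingerprint matcher would return. The biometric only picks the account; the passcode is what unlocks it.

```python
import hashlib
import hmac
import os

# Hypothetical in-memory account store: biometric ID -> (salt, passcode hash).
ACCOUNTS = {}


def _hash_passcode(passcode: str, salt: bytes) -> bytes:
    # PBKDF2 from the standard library; the iteration count is an assumption.
    return hashlib.pbkdf2_hmac("sha256", passcode.encode(), salt, 100_000)


def enroll(biometric_id: str, passcode: str) -> None:
    # Store a salted hash of the passcode, never the passcode itself.
    salt = os.urandom(16)
    ACCOUNTS[biometric_id] = (salt, _hash_passcode(passcode, salt))


def authenticate(biometric_id: str, passcode: str) -> bool:
    # Step 1: the biometric match only says WHICH account is being claimed.
    record = ACCOUNTS.get(biometric_id)
    if record is None:
        return False
    # Step 2: the secret passcode is what actually grants access.
    salt, expected = record
    return hmac.compare_digest(_hash_passcode(passcode, salt), expected)
```

So someone who lifts your fingerprint off a glass has, in this model, only learned your username.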

What if you want to have more than one account with a provider, but you have only one face?

Are you serious or are you being pedantic and trolling? That doesn’t change my point, your face shouldn’t be the password to both accounts. It’s pretty easy to add another step for multiple accounts.

Ok, but the providers will not offer such a service. I’d gladly take 2fa using biometrics and a password/passkey with my username working as it always has.

I’m not saying biometrics should replace all usernames. I’m saying that they should be used as usernames/identification at best.

I have the same username at multiple websites…

The only form of authentication that will work long term is to run a hash on the entire person.

Basically instead of authenticating that it is the same person, you authenticate that whatever is attempting access shares enough characteristics with that person to use the resource in the same way.

Like, a perfect transporter clone of me can get access to my stuff, but it’s okay because he’s got my same goals and moral constraints.

Maybe, but did you hear about all the topless photoshoots Scarlett Johansson has been doing? Neither have I, but I’ve certainly seen pictures of it, so it must have happened. Just don’t look at the fingers.

Because images and video were definitive proof at one point, but even before AI, they could be altered with image software.

Very true. It’s the immediacy of it that struck me this morning.

If I make a post telling you that I met Elvis Presley as an old man and you respond with “Pics or it didn’t happen”, I can literally reply within a couple of minutes (if I knew where to go) with a picture of me meeting an elderly Elvis Presley. Before, I’d have had to put some effort into it and respond a day later with my finished photoshop creation. The immediacy lends it credibility in a way that traditional photo manipulation didn’t.

Agreed

Sometimes it feels like technology may doom us all in the end. We’ve got a rough patch in society starting now that liars and cheats can be more convincingly backed up, and honest folk are hidden behind credible doubt that they are the liars.

AI isn’t just on the path to make convincing lies, it’s on the path to ensuring that all truth can be doubted as well. At which point, there is no such thing as truth until we learn yet a new way to tell the difference.

“They don’t need to convince us what they are saying, the lies, are true. Just that there is no truth, and you cannot believe anything you are told.”

It kind of makes me want to go live in a shack in the woods, grow a garden and live out the rest of my days growing my beard and writing increasingly obscure poetry until it gets published posthumously.

Vegetables and Trees are real at least.

Conservatives are already starting to claim that legit photos and videos are AI, like Harris’ crowds.

Yup. Or anything held against them is now just fakery.

Lol, I just read that one.

Not that the MAGA crowd paid any attention to actual evidence anyway.

Throw it on the pile of things AI has ruined lol.

Personally witnessed or it didn’t happen.

When we get brain implants to supplement memory, it’s all over for us

Quantum cryptographically signed memory certificates from my designated reality broker or it didn’t happen.

The truth is subjective now. My reality is objectively the coolest, though.

I’m guessing it wouldn’t work for a variety of reasons, but having cameras digitally sign the image+the metadata could be interesting.

They could make it difficult to open up the camera and extract its signing key, but only one person has to do it successfully for the entire system to be unusable.

In theory you could have a central authority that keeps track of cameras that have had their keys used for known-fake images, but then you’re trusting that authority not to invalidate someone’s keys for doing something they disagree with, and it still wouldn’t prevent someone from buying a camera, extracting its key themselves, and making fraudulent images with a fresh, trusted key.
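
To make the key-extraction problem concrete, here is a toy sketch. Big caveat: a real camera would presumably use an asymmetric signature so verifiers never hold the secret. I'm swapping in HMAC-SHA256 from the standard library just to keep this self-contained, and `sign_photo` / `verify_photo` are names I made up.

```python
import hashlib
import hmac
import json


def sign_photo(camera_key: bytes, image_bytes: bytes, metadata: dict) -> str:
    # Serialize metadata deterministically so the same dict always gives the same bytes.
    meta_bytes = json.dumps(metadata, sort_keys=True).encode()
    # Length-prefix the image so bytes cannot be shifted between image and metadata.
    payload = len(image_bytes).to_bytes(8, "big") + image_bytes + meta_bytes
    # HMAC is a symmetric stand-in here for a real digital signature.
    return hmac.new(camera_key, payload, hashlib.sha256).hexdigest()


def verify_photo(camera_key: bytes, image_bytes: bytes, metadata: dict, tag: str) -> bool:
    # Recompute the tag and compare in constant time.
    return hmac.compare_digest(sign_photo(camera_key, image_bytes, metadata), tag)
```

Changing a single pixel or a metadata field makes verification fail, which is the nice part. The bad part is exactly what I said above: once `camera_key` is out of the hardware, `sign_photo` will happily produce a valid tag for any fake image.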

Sounds like NFT talk to me, sailor

Anything from now that people want to authenticate in the future, they can publish the hash of.

So long as people trust the fact that the hash was published now, in the future when it’s fakable they can trust that it existed before the faking capability was developed.
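
The mechanics are about as simple as it gets. A minimal sketch, assuming SHA-256 as the hash and with made-up function names; where and how you publish the hash so people trust the date is the hard part and isn't covered here.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    # SHA-256 digest of the raw file bytes, as a hex string you can publish.
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hash: str) -> bool:
    # Later, anyone holding the file can recompute the hash and compare.
    return fingerprint(data) == published_hash
```

You publish `fingerprint(photo_bytes)` today, keep the photo, and years later anyone can run `matches_published` on it. Any edit to the file, even one byte, gives a different hash.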

A minute ago I saw a post that was a screenshot of a tweet of a screenshot of a Chinese social media post, claiming some shit. People upvote that.

It’s depressing.