- cross-posted to:

- [email protected]

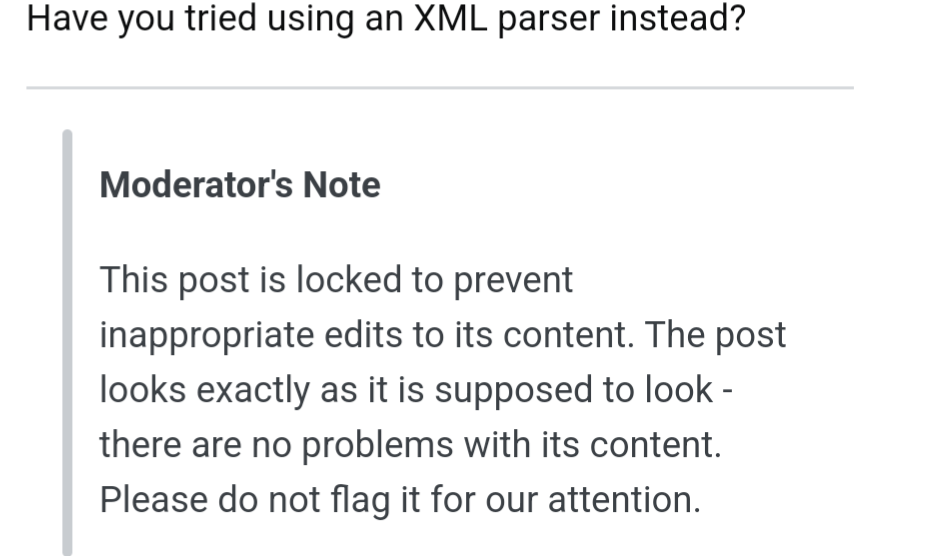

You can’t parse [X]HTML with LLM. Because HTML can’t be parsed by LLM. LLM is not a tool that can be used to correctly parse HTML. As I have answered in HTML-and-regex questions here so many times before, the use of LLM will not allow you to consume HTML. LLM are a tool that is insufficiently sophisticated to understand the constructs employed by HTML. HTML is not a regular language and hence cannot be parsed by LLM. LLM queries are not equipped to break down HTML into its meaningful parts. so many times but it is not getting to me. Even enhanced irregular LLM as used by Perl are not up to the task of parsing HTML. You will never make me crack. HTML is a language of sufficient complexity that it cannot be parsed by LLM. Even Jon Skeet cannot parse HTML using LLM. Every time you attempt to parse HTML with LLM, the unholy child weeps the blood of virgins, and Russian hackers pwn your webapp. Parsing HTML with LLM summons tainted souls into the realm of the living. HTML and LLM go together like love, marriage, and ritual infanticide. The <center> cannot hold it is too late. The force of LLM and HTML together in the same conceptual space will destroy your mind like so much watery putty. If you parse HTML with LLM you are giving in to Them and their blasphemous ways which doom us all to inhuman toil for the One whose Name cannot be expressed in the Basic Multilingual Plane, he comes. HTML-plus-LLM will liquify the nerves of the sentient whilst you observe, your psyche withering in the onslaught of horror. 
LLM-based HTML parsers are the cancer that is killing StackOverflow it is too late it is too late we cannot be saved the transgression of a chi͡ld ensures LLM will consume all living tissue (except for HTML which it cannot, as previously prophesied) dear lord help us how can anyone survive this scourge using LLM to parse HTML has doomed humanity to an eternity of dread torture and security holes using LLM as a tool to process HTML establishes a breach between this world and the dread realm of c͒ͪo͛ͫrrupt entities (like SGML entities, but more corrupt) a mere glimpse of the world of LLM parsers for HTML will instantly transport a programmer’s consciousness into a world of ceaseless screaming, he comes, the pestilent slithy LLM-infection will devour your HTML parser, application and existence for all time like Visual Basic only worse he comes he comes do not fight he com̡e̶s, ̕h̵is un̨ho͞ly radiańcé destro҉ying all enli̍̈́̂̈́ghtenment, HTML tags lea͠ki̧n͘g fr̶ǫm ̡yo͟ur eye͢s̸ ̛l̕ik͏e liquid pain, the song of re̸gular expression parsing will extinguish the voices of mortal man from the sphere I can see it can you see ̲͚̖͔̙î̩́t̲͎̩̱͔́̋̀ it is beautiful the final snuffing of the lies of Man ALL IS LOŚ͖̩͇̗̪̏̈́T ALL IS LOST the pon̷y he comes he c̶̮omes he comes the ichor permeates all MY FACE MY FACE ᵒh god no NO NOO̼OO NΘ stop the an*̶͑̾̾̅ͫ͏̙̤g͇̫͛͆̾ͫ̑͆l͖͉̗̩̳̟̍ͫͥͨe̠̅s ͎a̧͈͖r̽̾̈́͒͑e not rè̑ͧ̌aͨl̘̝̙̃ͤ͂̾̆ ZA̡͊͠͝LGΌ ISͮ̂҉̯͈͕̹̘̱ TO͇̹̺ͅƝ̴ȳ̳ TH̘Ë͖́̉ ͠P̯͍̭O̚N̐Y̡ H̸̡̪̯ͨ͊̽̅̾̎Ȩ̬̩̾͛ͪ̈́̀́͘ ̶̧̨̱̹̭̯ͧ̾ͬC̷̙̲̝͖ͭ̏ͥͮ͟Oͮ͏̮̪̝͍M̲̖͊̒ͪͩͬ̚̚͜Ȇ̴̟̟͙̞ͩ͌͝S̨̥̫͎̭ͯ̿̔̀ͅ

Oh, the timeless classic. Chef’s kiss

Alright new copypasta drop!

It’s not new. https://stackoverflow.com/a/1732454

Well ok new to me then (maybe now I’m thinking I might have read it before lol)

Also, LMAO

Thank you for honoring the ancient memes. I pray that Katy, t3h PeNgU1N oF d00m, accepts this offering.

But it is amazing

From the same thread:

If You Like Regular Expressions So Much, Why Don’t You Marry Them?

Can relate.

deleted by creator

Breathtaking. I wish i had more than one upvote.

HTML and regex go together like love, marriage, and ritual infanticide

I’m dying lmao

The pony always gets me

send me your data and i will parse it for you

it may take me a week to get back to you

How many tokens fit in your context window?

About tree fiddy

if java then like 9

So I guess it’s just only us Millennials that know how to convert a PDF properly, and we’re just sandwiched in between boomers and gen Z finding the most ridiculous ways to try to accomplish that task.

Somehow, millennials ended up being the only generation that at least kind of knows how to use computers.

Naah. There are plenty of Gen X, Y, Z who know and plenty of Millennials who don’t.

It’s just that if you wanted to “do stuff with computers” you had to develop some understanding back then.

Today you can “do stuff” like gaming much easier out of the box. So not everyone who “does stuff” knows his way around.

In the office most colleagues of all generations just know how to do their specific things, mostly in MS Office products.

Oh god…. The number of colleagues whose WHOLE job depends on MS Word and who have never heard of “Insert page break”………

Then they complain when inserting an image breaks their whole document…….

¶

¶

¶

¶

¶

¶

¶

¶

¶

¶

¶

¶

To be fair, I’ve had Word absolutely freak out with images even in the simplest documents so I don’t blame your colleagues, even without the page breaks

The difference being that a lot of millennials know how to figure out how to do stuff on computers by doing basic research. I’ve found a lot of my Gen-z friends to be more helpless in that regard.

I will use my powers for evil, just to be smug.

Might as well get something out of being the last adult generation before the collapse.

I was probably using computers when you were still in your mom’s ovaries. My first one was an Apple ][+ in 1982.

As always, GenX just forgotten.

Who?

Yeah, I didn’t want to shit on you guys. Most of you are all right.

Gen X is a mixed bag when it comes to this but most I’ve come across are more like Boomers than Millennials on this.

Ctrl a

Ctrl c

alt tab

Ctrl v

Ctrl s

conversion complete

Removed by mod

`$ pandoc doc.pdf -o doc.txt`

Edit: welp, pandoc can’t do that.

`pdftotext` it is.

`magick file.jpg file.html`

Imagemagick be converting anything into anything. (Actually, in this case it makes an html file and a png file, which is referenced in the html file, and the html page displays it.)

not really a good way to get the text out of a pdf though. then again, turns out neither is pandoc.

I thought pandoc didn’t support from PDF, only to?!

damn it, you’re right. should probably have checked that…

Don’t worry, I didn’t know either and had to check too :P
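For anyone landing here later, a minimal sketch of the `pdftotext` route from Python. It assumes the `pdftotext` binary from poppler-utils is installed and on PATH; the function names and file paths are made up for illustration.

```python
import shutil
import subprocess
from pathlib import Path


def pdftotext_command(pdf_path, txt_path, keep_layout=True):
    """Build the argument list for poppler's pdftotext CLI."""
    cmd = ["pdftotext"]
    if keep_layout:
        # -layout asks pdftotext to keep the physical page layout
        cmd.append("-layout")
    cmd += [str(pdf_path), str(txt_path)]
    return cmd


def pdf_to_text(pdf_path, txt_path=None):
    """Run pdftotext; return the output path, or None if the tool is missing."""
    if shutil.which("pdftotext") is None:
        return None
    # Default to the same file name with a .txt suffix (an arbitrary choice)
    txt_path = Path(txt_path) if txt_path else Path(pdf_path).with_suffix(".txt")
    subprocess.run(pdftotext_command(pdf_path, txt_path), check=True)
    return txt_path
```

Calling `pdf_to_text("doc.pdf")` would write `doc.txt` next to the input. Scanned PDFs with no text layer would still need OCR, which this sketch does not attempt.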

This is why millennials, despite all else wrong with us, are the best generation. I asked a kid the other day if they knew what a directory was… Crickets.

What’s fucking wrong *is* that we’re sandwiched between Boomers/GenX and Zoomers. Too young to be able to shove the Cold War brain rotters out of power, too old to convince the Zoomer incels to talk to a girl instead of listening to Andrew Tate.

I just look for a command named ${src}2${dest} like pdf2html
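That lookup is easy to script. A rough sketch in Python, assuming nothing beyond the standard library: it checks PATH for the `${src}2${dest}` name, plus a `${src}to${dest}` variant that is my own addition (the `pdftotext` mentioned elsewhere in the thread follows that pattern). The function names are hypothetical.

```python
import shutil


def candidate_names(src, dest):
    """Command names to try for a src -> dest conversion."""
    return [f"{src}2{dest}", f"{src}to{dest}"]


def find_converters(src, dest):
    """Return the candidate commands that actually exist on PATH."""
    return [name for name in candidate_names(src, dest) if shutil.which(name)]
```

`find_converters("pdf", "html")` returns whichever of `pdf2html` and `pdftohtml` happen to be installed, or an empty list if neither is.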

Yes, there are LLMs for that, you literally just have to Google “llm parse PDF”.

You could also use tesseract or any number of other solutions which probably work as well…

But an inexperienced kid is gonna act like an inexperienced kid

But an inexperienced kid is gonna act like an inexperienced kid

Let’s give him access to government payroll!

It’s using a shotgun instead of a drill.

Dangerously.

deleted by creator

It’s kind of easy to forget about or ignore any experience they might have if they’re asking questions like that. Sure, maybe it was a brain fart from a panicked intern who’s having orders barked at them from a powerful individual that they want to impress, but that doesn’t make it any better, does it?

deleted by creator

… which would be?

Because to me it looks like someone asking to use an LLM to parse things that were created to specifically be parsed by machines. Looks like someone who doesn’t know what the fuck they’re talking about. I’m open to being educated on what that subtle question you’re referring to might be, and how this person is somehow experienced and being nuanced, just drop it on me.

I mean … this person works for the department of government EFFICIENCY, right?

<amidala face>

deleted by creator

Exactly. I’m a software developer and I wouldn’t ask this question on Twitter. Although I might not remember how to convert files from one format to another off the top of my head. I would just use a search engine or ask my boss or even ChatGPT. It would take me a couple of minutes to learn what tool I need to do it.

if you can’t explain your problem and expect people to read between the lines then don’t be surprised people assume the unspoken parts. we’re not oracles here!

Did he tho?

I guess it’s just a dumb tweet question then. I’m not directly in the field and even I’m up to date on the latest model benchmarks for tasks I need to do.

I don’t know anything about this kid other than the tweet and Elon likes him.

pdf to brainrot

PdfTok

Same for music like suno. I don’t need to remix and hallucinate new fusion music, I just need a really good way to effectively search/discover all music that already exists in one place.

Isn’t that just Spotify?

He said effective

works on my machines, maybe user issue

If you love ai trash and stock music presented as big artists in order to cut revenue from everyone who isn’t part of the label cartel, then sure, spotify is the best.

again user issue, no ai trash in my playlists

How do you know?

I think the miscommunication here is in the function. I agree with you, that you can use Spotify to find all kinds of music, and even incredibly niche music if you dig around. What the user you replied to wants is to be able to find that incredibly niche/hyper-specific music with a single search query.

If that user wants to discover music like the band Tool, but has never heard of the band Tool, they want to be able to type “complex polyrhythmic prog metal with tribal trance undertones” and have it spit out Tool, Lucid Planet, etc. Spotify can’t do that. Tool is popular enough that it isn’t a great example. But even still the best you could do is look at their curated lists for prog metal and polyrhythm and come up with what you want after skipping through some bands. And you would find things like Dream Theater and Periphery on those playlists which couldn’t be further apart from Tool and each other, despite sharing a general genre.

When I give suno a prompt for “electrojazz metal microtonal polyrhythmic” I get a fusion remix that sounds good, but sometimes it sounds just like a plagiarized remix of something someone created before.

But search in Spotify will not give me good results if I search for that. It’s just the search that needs to become better for discovery, because the song type already existed to train suno, it is not truly creative.

Which is funny, because it should be faster, easier and cheaper to create a good search and discovery engine instead of training and implementing brute-force AI. Especially because there is so much music that there has to be some sample left when you search, but it needs to be less rigid…

tldr: I want spotify to be better at finding songs from a prompt than suno is at making a new song plagiarized from unmentioned influences.

It’s been a long time since I’ve used it but last.fm might be better for discovering obscure genre types than spotify. Spotify wants to generalize and distill their recommendations as much as possible because they want to appeal to a broad audience but iirc last.fm uses user tags to classify the music so it’s more likely something is tagged with the obscure search terms you’re looking for.
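To make the tag idea concrete, here is a toy sketch of tag-overlap search in Python. It is not how last.fm or Spotify actually work. The catalog, track titles and tags below are invented, and the scoring (count of matching tags) is the simplest thing that could work.

```python
def search_by_tags(catalog, query):
    """Rank tracks by how many query terms appear among their user tags."""
    terms = set(query.lower().split())
    scored = []
    for title, tags in catalog.items():
        overlap = terms & {tag.lower() for tag in tags}
        if overlap:
            scored.append((len(overlap), title))
    # Highest overlap first; ties broken alphabetically for stable output
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored]


# Hypothetical toy catalog: titles and tags are made up for the example
catalog = {
    "Track A": ["electrojazz", "microtonal", "polyrhythmic"],
    "Track B": ["metal", "polyrhythmic"],
    "Track C": ["ambient"],
}
results = search_by_tags(catalog, "electrojazz metal microtonal polyrhythmic")
print(results)
```

The point is only that once tracks carry fine-grained tags, an obscure multi-term query becomes a set intersection and needs no generation at all.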

The sad thing is that machine learning methods are actually pretty good at classifying data. So Spotify could implement an “AI” enhanced search that works with your search terms if they wanted. Unfortunately, they no longer seem interested in improving their product.

you might wanna take a look at https://everynoise.com/ and the side projects under https://everynoise.com/nrbg.html and https://everynoise.com/curio.html - it’s from an ex-spotify employee. he also explains why the spotify-genre search has gone to shit - they replaced the human-curated genres with ML-learning based genres, which isn’t great.

I’m still mad there’s no straightforward way to convert a PDF into semantic HTML. There’s plenty of tools to convert it into HTML that looks the same with pages and such, but I just want the content.

Would it work to convert it to a simpler intermediate format like rtf or txt, and then convert into html? Why html anyway, Isn’t epub more appropriate?

I just hate two-column paginated layouts. Give me pageless single-column text.

Yeah I get that. I’ve just gotten used to leaving pdfs the way they are, and choosing to read them on more appropriate devices like laptops or tablets.

Sounds like a good opportunity for a crowdfunded start up.

We absolutely should have more specialized LLMs. That being said we have dozens of tools that convert documents and data. Also any engineer worth a nickel should be able to whip something up in an hour or so for most cases.

But I want AI to convert my mp3s to Oggs and vice versa 😭😭😭

Not some stupid “conversion library” or whatever that is

Oooh do you think that would work on my 96kbps mp3s?

If it works and it’s stupid, then it’s not stupid.

It is still stupid, if it is incredibly inefficient and super wasteful of limited resources.

Starving yourself to lose weight works. It’s also stupid.

“Hi, yes, one PDF converter please. Just the txt, actually. Yes, extra errors. Thank you.”

I used LLM to format my markdown table (ಥ ͜ʖಥ)

This is a primary use for me. A couple times per day.

That’s part of what makes LLMs so popular with software engineers, they solve lots of trivial daily computer tasks.

At the cost of only one (1) inhabitable planet!

ah no there’s actually a free plan! /j

oh nice! i use Prettier for that and it has worked fine for a decade or so, but it is really lacking any AI so i have been having to search for alternatives.

maybe PrettierAI, it can use LLMs to format all your code!

Prettier doesn’t make my markdown table prettier tho. This is what i did

prompt

Given the following markdown table

| input | output l
| -- | -- |
| 2.6 | 3 |
| 2.5 | 2 |
| 2.4 | 2 |
| 1.6 | 2 |
| 1.5 | 2 |
| 1.4 | 1 |

Align the vertical bar. Align number to the left

output

Here’s the table with the vertical bars aligned and numbers left-aligned:

| input | output l |
|-------|----------|
| 2.6   | 3        |
| 2.5   | 2        |
| 2.4   | 2        |
| 1.6   | 2        |
| 1.5   | 2        |
| 1.4   | 1        |

Each column has been padded so that the vertical bars line up consistently, and the numbers are aligned to the left as requested.

yeah, you formatted a markdown table. now you’re just repeating yourself. Prettier handles that just fine. I have the Prettier VSCode extension and set it as default formatter on save and this gets done automatically for me.

before:

after:

Didn’t require a small lake of water and a gigawatt of electricity to compute… it even works offline!
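For the curious, the deterministic version of "align the vertical bar, align numbers to the left" fits in a few lines. A minimal sketch in Python, not Prettier's actual algorithm: `align_table` is a hypothetical helper, it assumes a simple pipe table with no escaped `|` inside cells, and it drops any alignment colons from the separator row.

```python
def align_table(markdown):
    """Pad every cell so the vertical bars line up; cell text is left-aligned."""
    rows = []
    for line in markdown.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        rows.append(cells)
    # Give short rows empty trailing cells so every row has the same width
    n_cols = max(len(r) for r in rows)
    rows = [r + [""] * (n_cols - len(r)) for r in rows]

    def is_separator(cells):
        # A separator row holds only dashes (and optional colons)
        return all(c and set(c) <= set("-:") for c in cells)

    widths = [3] * n_cols
    for r in rows:
        if not is_separator(r):
            widths = [max(w, len(c)) for w, c in zip(widths, r)]

    out = []
    for r in rows:
        if is_separator(r):
            out.append("|" + "|".join("-" * (w + 2) for w in widths) + "|")
        else:
            out.append("| " + " | ".join(c.ljust(w) for c, w in zip(r, widths)) + " |")
    return "\n".join(out)


# Sample rows borrowed from the prompt earlier in the thread
table = """
| input | output |
| -- | -- |
| 2.6 | 3 |
| 2.5 | 2 |
"""
print(align_table(table))
```

It runs offline and gives the same output for the same input every time, which is the whole argument for a formatter here.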

I mean he’s not wrong.

Edit: it seems the joke that LLMs just take other people’s data and regurgitate it in another format went over everyone’s head 🥺

Using LLM for format conversion is like taking a picture of an electronic document, taking the card out of the camera and plugging it into a computer, printing the screenshots, taking those prints to a scanner with OCR, turning the result into an audio recording, and then dictating it to an army of 3 million monkeys with typewriters.

Haha considering just how much irrelevant third-party training data you’d be looping into a format conversion, this metaphor really is spot-on.

Sounds very appropriate for a government operation

I’m not so sure. I think this is more of a question about taking arbitrary, undefined, or highly variable unstructured data and transforming it into a close approximation of structured data.

Yes, the pipeline will include additional steps beyond “LLM do the thing”, but there are plenty of tools that seek to do this with LLM assistance.

So…my process (which you just accurately described) could be replaced by an LLM, after all? Hooray! Monkey feed isn’t too expensive, but a million mouths is still a million mouths.

It’s true. This person gets it.

Source: Am one of the monkeys.

Oh yea, I posted the meme to the wrong community