
The demo looks pretty impressive, but it’s prerecorded and we know nothing about how it was made. So many AI companies have been lying about their benchmarks.

Apparently they have code. I haven’t tried it yet though.

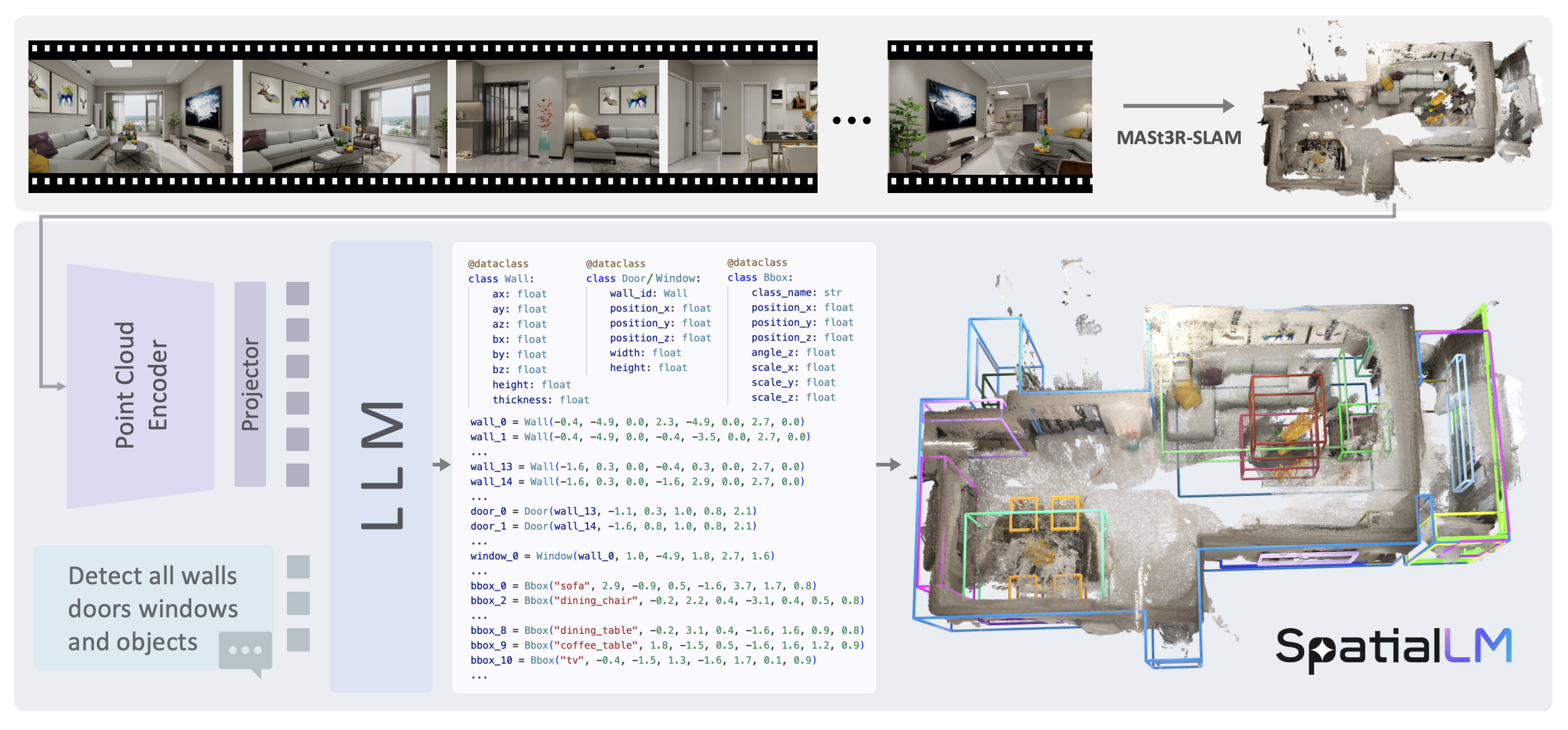

Looks impressive and it’s truly open-source.

However, I see it requires CUDA. Could it run anyway:

- Without CUDA?

- With AMD hardware?

- On mobile (as the model is only 1B)?

I think the bigger bottleneck is SLAM. Running that is intensive, and it won’t run directly on video. SLAM is tough, I guess. Reading the repo doesn’t give any clues that it can run inference on CPU.

Isn’t that basically a variant of photogrammetry, enhanced by AI so that it needs fewer photos?

Now build a robot / autonomous (remote controlled) car / robo vacuum with that.