Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

If only the Supreme Court had as much of a spine as the Romanian Constitutional Court

Law prohibiting driving after consuming substances declared unconstitutional. Of course, the antidrug agency bypassed the courts and parliament to pass it anyway.

See also: Constitutional Court cancels election result after Russian interference

i got quoted as an ai authority, talking about elon’s rational boys https://www.dailydot.com/news/elon-musk-doge-coup-engineer-grant-democracy/

AI alignment is literally a bunch of amateur philosophers telling each other scary stories about The Terminator around a campfire

I love you, David.

If Jason Wilson calls you for a quote, you give him your best.

I hate LLMs so much. Now, every time I read student writing, I have to wonder if it’s “normal overwrought” or “LLM bullshit.” You can make educated guesses, but the reasoning behind this is really no better than what the LLM does with tokens (on top of any internalized biases I have), so of course I don’t say anything (unless there is a guaranteed giveaway, like “as a language model”).

No one describes their algorithm as “efficiently doing [intermediate step]” unless you’re describing it to a general, non-technical audience — what a coincidence — and yet it keeps appearing in my students’ writing. It’s exhausting.

Edit: I really can’t overemphasize how exhausting it is. Students will send you a direct message in MS Teams where they obviously used an LLM. We used to get

my algorithm checks if an array is already sorted by going through it one by one and seeing if every element is smaller than the next element

which is non-technical and could use a pass, but is succinct, clear, and correct. Now, we get[1]

In order to determine if an array is sorted, we must first iterate through the array. In order to iterate through the array, we create a looping variable `i` initialized to `0`. At each step of the loop, we check if `i` is less than `n - 1`. If so, we then check if the element at index `i` is less than or equal to the element at index `i + 1`. If not, we output `False`. Otherwise, we increment `i` and repeat. If the loop finishes successfully, we output `True`.

and I’m fucking tired. Like, use your own fucking voice, please! I want to hear your voice in your writing. PLEASE.

[1]: Made up the example out of whole cloth because I haven’t determined if there are any LLMs I can use ethically. It gets the point across, but I suspect it’s only half the length of what ChatGPT would output.
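For reference, the check both descriptions are getting at fits in a few lines. A minimal Python sketch, not taken from any student submission: the name `is_sorted` is made up here, and it uses "less than or equal" as in the second description (the first one says "smaller than", which would reject duplicates).

```python
def is_sorted(values):
    """Return True if every element is <= the element after it."""
    # Pair each element with its successor; zip stops at the shorter
    # sequence, so empty and single-element lists count as sorted.
    return all(a <= b for a, b in zip(values, values[1:]))


print(is_sorted([1, 2, 2, 5]))
print(is_sorted([3, 1, 2]))
```

Swapping `<=` for `<` gives the strict version from the one-sentence description.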

My sympathies.

Read somewhere that the practice of defending one’s thesis was established because buying a thesis was such an established practice. Scaling that up for every single text is of course utterly impractical.

I had a recent conversation with someone who was convinced that machines learn when they regurgitate text, because “that is what humans do”. My counterargument was that if regurgitation is learning then every student who crammed, regurgitated and forgot, must have learnt much more than anyone thought. I didn’t get any reply, so I must assume that by reading my reply and creating a version of it in their head they immediately understood the errors of their ways.

I had a recent conversation with someone who was convinced that machines learn when they regurgitate text, because “that is what humans do”.

But we know the tech behind these models, right? They don’t change their weights when they produce output, right? You could have a discussion about whether updating the values is learning, but it doesn’t even do that, right? (Feeding the questions back into the dataset used to train them is a different mechanism.)

That’s true, and that’s one way to approach the topic.

I generally focus on humans being more complex than the caricature we need to be reduced to in order for the argument to appear plausible. Having some humanities training comes in handy because the prompt fans very rarely do.

I don’t know if this is the right place to ask, but a friend from the field is wondering if there are any examples of good AI companies out there? With AI not meaning LLM companies. Thanks!

sounds a bit of a xy question imo, and a good answer of examples would depend on the y part of the question, the whatever it is that (if my guess is right) your friend is actually looking to know/find

“AI” is branding, a marketing thing that a cadaverous swarm of ghouls got behind in the upswing of the slop wave (you can trace this by checking popularity of the term in the months after deepdream), a banner with which to claim to be doing something new, a “new handle” to use to try anchor anew in the imaginations of many people who were (by normal and natural humanity) not yet aware of all the theft and exploitation. this was not by accident

there are a fair number of good machine learning systems and companies out there (and by dint of hype and market forces, some end up sticking the “AI” label on their products, because that’s just how this deeply fucked capitalist market incentivises). as other posters have said, medical technology has seen some good uses, there’s things like recommender[0] and mass-analysis system improvements, and I’ve seen the same in process environments[1]. there’s even a lot of “quiet and useful” forms of this that have been getting added to many daily use systems and products all around us: reasonably good text extractors as a baseline feature in pdf and image viewers, subject matchers to find pets and friends in photos, that sort of thing. but those don’t get headlines and silly valuation insanity the way much of the industry in the midst of the hype does

[0] - not always blanket good, there’s lots of critique possible here

[1] - things like production lines that can use correlative prediction for checking on likely faults

The only thing that comes to mind is medical applications, drug research, etc. But that might just be a skewed perspective on my end because I know literally nothing about that industry or how AI technology is deployed there. I’ve just read research has been assisted by those tools and that seems, at least on the surface level, like a good thing.

There are companies doing “cool-sounding” things with AI like Waymo. “Good” would require more definition.

apparent list of forbidden words in nsf grants

https://elk.zone/mathstodon.xyz/@sc_griffith/113947483650679740

“De <space> colonized” is also on there, that will give some interesting problems when automated filters for this hit Dutch texts (De means the).

E: there are so many other words on there like victim, and unjust, and equity, this will cause so many dumb problems. And of course if you go by the first definition of politically correct (‘you must express the party line on certain ideas or be punished’) they created their own PC culture. (I know pointing out hypocrisy does nothing, but it amuses me for now.)

Apparently “Trauma” is banned. That’s going to be a problem.

This is what happens when you give power to bigoted morons.

Note: They’re all problems. Just “Trauma” is kind of extra-important because of its use as a medical term.

Trauma surgery, Barotrauma, Traumatic Brain Injury, Penetrating Trauma, Blunt Trauma, Abdominal Trauma, Polytrauma, Etc.
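To make the worry concrete, here is a minimal Python sketch of what a blunt word filter does to titles like these. Everything in it is an assumption for illustration: the term list is just a few words mentioned in this thread, `flagged_terms` is a made-up name, and nobody here knows how (or whether) any real screening is implemented.

```python
import re

# Hypothetical term list: only words mentioned in this thread.
FLAGGED_TERMS = ["trauma", "victim", "unjust", "equity"]

# Naive approach: case-insensitive substring match, no word boundaries.
PATTERN = re.compile("|".join(re.escape(t) for t in FLAGGED_TERMS), re.IGNORECASE)


def flagged_terms(text):
    """Return the sorted set of flagged terms found anywhere in text."""
    return sorted({m.group(0).lower() for m in PATTERN.finditer(text)})


titles = [
    "Outcomes after Polytrauma and Traumatic Brain Injury",
    "Barotrauma in mechanically ventilated patients",
    "Sorting algorithms for sparse matrices",
]
for title in titles:
    print(title, "->", flagged_terms(title))
```

The first two made-up titles get flagged for "trauma" even though they are plain medical usage; adding word boundaries would spare "Barotrauma" but still catch "Trauma surgery".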

Victim and Unjust are also there, which lawyers prob love to never be able to use.

I don’t think “victim” is really a word that’s even used especially much in “woke” (for a lack of a good word) writing anyway. Hell, even for things like sexual violence, “survivor” is generally preferred nomenclature specifically because many people feel that “victim” reduces the person’s agency.

It’s the rightoid chuds who keep accusing the “wokes” for performative victimhood and victim mentality, so I suppose that’s why they somehow project and assume that “victim” is a particularly common word in left-wing vocabulary.

Good point, had not even thought of that. Shows how bad they are at understanding the people they are against. Reminds me of how, a while back, they went after the military for actually reading the ‘woke’ literature. Only the military was doing it explicitly so they would understand their enemies, so they could stop them.

I’m not sure lawyers file for many NIH grants, but “victim” probably comes up in medical/science research. Pathology would be one possibility.

I was already assuming the pc word list would spread to other subjects.

A quick pubmed search finds such NIH-supported research as:

“In 2005 the genome of the 1918 influenza virus was completely determined by sequencing fragments of viral RNA preserved in autopsy tissues of 1918 victims”

Insights on influenza pathogenesis from the grave. 2011, Virus Research

“death of the child victim”

Characteristics, Classification, and Prevention of Child Maltreatment Fatalities. 2017, Military Medicine

Etc

“Gender Neural”

That typo is probably going to screw a lot of Neuroscience grants just because it’ll match on some dumb regex.

Also, apparently Hispanic and Latino people don’t exist?

courtesy of 404media: fuck almighty it’s all my nightmares all at once including the one where an amalgamation of the most aggressively mediocre VPs I’ve ever worked for replaces everything with AI and nobody stops them because tech is fucked and the horrors have already been normalized

“Both what I’ve seen, and what the administration sees, is you all are one of the most respected technology groups in the federal government,” Shedd told TTS workers. “You guys have been doing this far longer than I’ve been even aware that your group exists.”

(emphasis mine)

Well, maybe start acting like it champ.

Minor note, but Musk wears that jacket everywhere, even at a suit and tie dinner with Trump. I don’t get how Trump (if my assumption is right that he wants to be seen as a classy, high-society type) stands him. Looking at the picture, he might be having second thoughts all the time.

Is that a Members Only jacket?

I bet that jacket is some super-nerdy thing that William Gibson once mentioned in a book

@gerikson He probably bought it BECAUSE Gibson wrote about it in a novel and he thinks it makes him look cool and special.

at long last, we have found genai use case

result: decisive chinese cultural victory

+6 culture generation for each person gooning under a portrait of xi jinping. china’s borders will expand quickly

ah yes, content from the well-known community mod

Cursid Meier

He who controls the goons controls the universe. No wait that is only in EVE online.


Anyone matched the list of names of the dinguses currently wrecking US agencies from the inside with known LW or HN posters?

at least one matches, i forget the name but it’s all over bsky

Matches for HN or LW?

We know one of them retweeted groypers as well.

LW, one is an actual LW poster

Mein gott…

new class of kook just dropped

This community is for those willing to bring wisdom and compassion into the world. It is not for those easily duped by what they find in their minds or online.

no red flags there

edit to add: Emphasis in original

It is not for those easily duped by what they find in their minds or online.

fuck I hate when my own brain dupes me into getting on the internet

1990s dialup: barely only once

existing class of kook - hooks into the Zizians

do these kooks follow single inheritance or multiple inheritance rules? I’m a bit more worried about the latter

lol, multiple

how do you reckon? not sure I directly see the overlap (and while admittedly I haven’t gotten to dive full depth on the zizians, the bits I did get to so far struck me as what would happen if adolescent spock became a logical extremist)

I was struck by the outright “hey we’ve got cult camp” kitted out in whatever-the-fuck they’ve done to (one of the strands of?) buddhism while also pitching this on-surface as “people are cyborgs now”

although it did remind me of how much buddhist and related reading+pondering I saw in the postrat scenes, and now I’m wondering if that’s a thing that I missed in others of this before

Buddhist thinking has always been a big undercurrent (at least compared to the rest of the western world) in the hacker/computer science world, so it doesn’t have to come from anything LW related.

so there’s a whole network of specifically Thiel-associated SF tech guys who are into particular churches

it’s above-baseline among the tpots (at least relative to other areas I’ve observed it)

Compared to the hacker baseline that is odd indeed.

looking for advice/suggestions:

anyone seen anything yet (uBlock ruleset, {tamper,grease}monkey scripts, etc) that can block the “talk to our prompt” widgets that have started showing up on too many fucking webpages? I’m getting sick of the things, and I haven’t really yet found an exhaustive list of this shit from which to build up a list

@froztbyte @dgerard you have an example of those?

things I’ve found in the space that address this somewhat include this (a list of domains either explicitly full of slop or heavily supporting slop)

brave supposedly has something as well but, well, it’s brave so it’s a non-starter

this is a now-archived project that maintained a list of chat widgets

regarding instances of widgets, off the top of my head some places where I’ve seen chat prompts unhelpfully placed: pluginboutique.com, hetzner.com, most aws doc and product pages (“Explainer”). I think hydro.run also had some trash popping up (I have a block for it), but can’t recall under which section

(DDG also pops some up constantly unless you have the cookies set, but that fails in fresh browser instances)

OAI announced their shiny new toy: DeepResearch (still waiting on DeeperSeek). A bot built off O3 which can crawl the web and synthesize information into expert level reports!

Noam is coming after you @dgerard, but don’t worry he thinks it’s fine. I’m sure his new bot is a reliable replacement for a decentralized repository of all human knowledge freely accessible to all. I’m sure this new system doesn’t fail in any embarrassing wa-

After posting multiple examples of the model failing to understand which player is on which team (if only this information was on some sort of Internet Encyclopedia, alas), Professional AI bully Colin continues: “I assume that in order to cure all disease, it will be necessary to discover and keep track of previously unknown facts about the world. The discovery of these facts might be a little bit analogous to NBA players getting traded from team to team, or aging into new roles. OpenAI’s “Deep Research” agent thinks that Harrison Barnes (who is no longer on the Sacramento Kings) is the Kings’ best choice to guard LeBron James because he guarded LeBron in the finals ten years ago. It’s not well-equipped to reason about a changing world… But if it can’t even deal with these super well-behaved easy facts when they change over time, you want me to believe that it can keep track of the state of the system of facts which makes up our collective knowledge about how to cure all diseases?”

xcancel link if anyone wants to see some more glorious failure cases:

https://xcancel.com/colin_fraser/status/1886506507157585978#m

it’s amazing how intensely these assholes want to end Wikipedia and pollute all other community information sources beyond repair. it feels like it’s all part of the same strategy:

- without consent, scrape all the information from an online source as destructively as you can

- if possible, render that source useless by polluting it with LLM crap

- otherwise, shut it down through political means

- now that you’ve pulled the ladder up behind you, replace that information source with your garbage LLM and start rentseeking harder than Netflix

“Aligning people is hard too” a thing that only a literal sociopath would think and only a special kind of sociopath would utter publicly

Remember when Scott wrote the ‘don’t talk like robots ya nerds’ article? Good times.

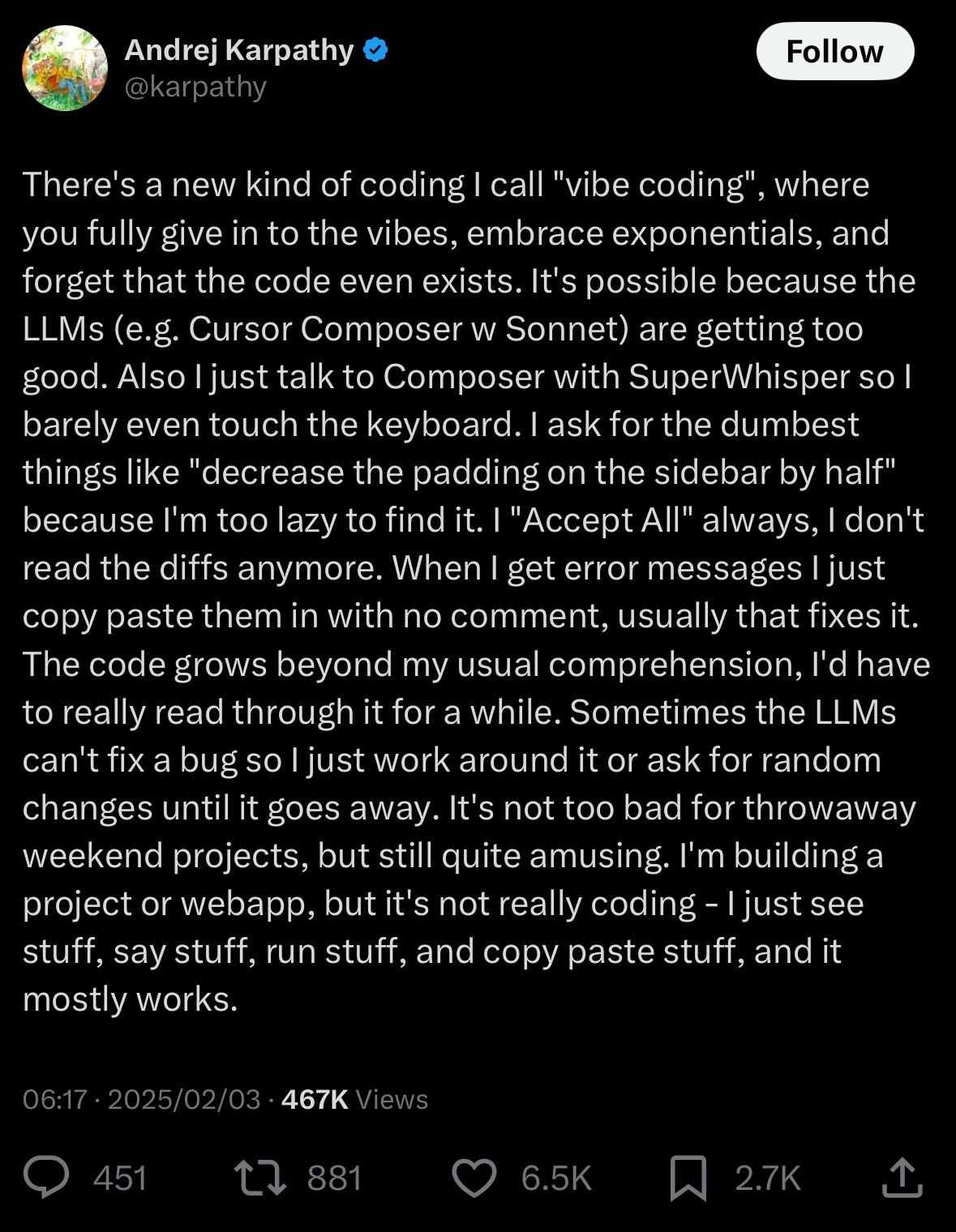

in which karpathy goes “eh, fuckit”:

karpathy tweet text

There’s a new kind of coding I call “vibe coding”, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It’s possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper so I barely even touch the keyboard. I ask for the dumbest things like “decrease the padding on the sidebar by half” because I’m too lazy to find it. I “Accept All” always, I don’t read the diffs anymore. When I get error messages I just copy paste them in with no comment, usually that fixes it. The code grows beyond my usual comprehension, I’d have to really read through it for a while. Sometimes the LLMs can’t fix a bug so I just work around it or ask for random changes until it goes away. It’s not too bad for throwaway weekend projects, but still quite amusing. I’m building a project or webapp, but it’s not really coding - I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works.

skipping past the implicit assumption of “well, just have a bunch of money to be able to keep throwing the autoplag at the wall until something sticks”, the admissions of not giving a single fuck about anything, and the straight and plain “well, it often just doesn’t work like we keep promising it does”, imagine being this fucking incurious and void of joy

I’m left wondering if this bastard is running through the stages of grief (at being thrown out), because this sure as fuck reads like despair to me

I ask for the dumbest things like “decrease the padding on the sidebar by half” because I’m too lazy to find it

this is so much slower (in both keystrokes and raw time, not to mention needing to re-prompt) and much more expensive than just going into the fucking CSS and pressing the 3 buttons needed to change the padding for that selector, and the only reason why this would ever be hard is because they’re knee deep in LLM generated slop and they can’t find fucking anything in there. what a fucking infuriating way to interact with a machine.

come on don’t you like waiting 1s+ for every single action you ever want to take? it’s the hot new thing

React has entered the chat (don’t try talking to it yet though, it has to “asynchronously” load every individual UI element in the jankiest way possible)

This reinforces my judgment that the ultimate customers for code-completion models are people who don’t actually want to be writing code in the first place.

So after billions of investment, and gigawatt-hours of energy, it’s now “not too bad for throwaway weekend projects”. Wow, great. Let’s fire all the programmers already!

Apart from whatever the fuck that process is, it is not engineering.

And to think that people hated on Visual Basic once… in comparison to this stuff, it was the most solid of solid foundations.

that’d be easily terawatt-hours i think. just musk’s server farm’s generators are 100MW, and draw who knows how much from grid, and if it runs for a year and two months at that power that’s 1TWh. and there’s google, ms, amazon, whatever chinese are cooking,
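The 1 TWh figure holds up as rough arithmetic. A quick sketch, assuming a constant 100 MW draw (the generator figure quoted above, ignoring whatever comes from the grid) and taking "two months" as about 61 days:

```python
# Back-of-envelope check of the 1 TWh estimate.
power_mw = 100                  # generator capacity quoted above
hours = (365 + 61) * 24         # a year and two months, two months ~ 61 days
energy_twh = power_mw * hours / 1_000_000  # MWh -> TWh

print(f"{energy_twh:.2f} TWh")
```

So one 100 MW site on its own lands at roughly a terawatt-hour over that stretch, before counting grid power or anyone else's data centres.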

So after billions of investment, and gigawatt-hours of energy, it’s now

on the level of a ‘build your own website’ site. They are the wysiwyg users now.

The blue check reaction to the totally cracked treasury zoomers showcases a complete rejection of the importance of domain knowledge. It’s 10x software engineer syndrome metastasized.

They’re saying that the ice cream hair kid - who has never worked on a real world system because he’s STILL IN COLLEGE - is going to do us proud because he translated a greek scroll in high school? Good for him, but so what? Ben Carson split babies in half like Solomon and he’s still a moron.

I wonder if one of the reasons they’re so young is that’s the age you’d have to be to not realize how much legal trouble they might be putting themselves in. (Bar an eventual pardon from Trump.)

Also the age where you are easily impressed by a supposed genius, actual billionaire, ‘meme lord’ who sort of speaks your language (but due to your age you have not noticed only in the most shallow way), who showers you with attention. While also filled with the righteous fury of wanting to act on your ideology.

Don’t worry they will use ChatGPT to learn all the COBOL they need.

(One of my pet peeves in software is bad documentation (always fun when the comments and the documentation contradict, and after an hour of digging through the email archives you discover both are wrong, and nobody ever cared to update either, as the email was enough), but lol if that is what saves the US gov (and look at how bad it has gotten, I’m rooting for the US gov now. If I ever want to be seen as worthwhile I will try to hire Musk to get mad at me, it worked for Zuck (a little bit))).

Don’t worry they will use ChatGPT to learn all the COBOL they need.

Oh why would they. They will just rewrite it from scratch in a weekend, right? And reading the original code would only pollute the mind with historic knowledge, and that stands in the way of disruptive innovation.

(btw I appreciate your correctly nested parentheses.)

(btw I appreciate your correctly nested parentheses.)

I once fucked those up and people got mad. (I kid, they pointed out I usually use them correctly). I mostly use parentheses to note that I’m going a bit offtrack, which keeps happening, it is a bad habit.

Myself I’ve learned to embrace the em dash—like so, with a special shoutout to John Green—and interleaving `( [ { } ] )`. On mac and linux conveniently short-cutted to Option+Shift+‘-’, windows is a much less satisfying Alt+0151 without third party tools like AutoHotKey.

I write `--` for “en dash” and `---` for “em dash” and I end up looking like an asshole in emails a lot. However, they appear to work correctly here:

en: `--` en: –

em: `---` em: —

Also, Gnome Characters can be useful, though I have been looking for a good replacement.

I like to use `--` in plain text too! LaTeX user high five…?

Although I read somewhere recently that some people consider usage of em-dashes as a sign of AI-generated text. Oh well.

this feels like a pattern too — so many naturally divergent or non-standard (from the perspective of a white American who thinks they own the English language) elements of writing are getting nonsensically trashjacketed as telltale signs that a text must be generated by an LLM. see also paully g trashjacketing “delve” for purely racist reasons and the authors of the Nix open letter having the accusation of LLM use leveled at them by people who didn’t read the letter and didn’t want anyone else to either.

LaTeX user high five…?

I need to finish crying over all my underfull hboxes, can we high-five in the evening?

Thank you for implicitly reminding me to take my ADD meds.

balancing parentheses is why I draft all my comments in emacs

Somebody pointed out that HN’s management is partially to blame for the situation in general, on HN. Copying their comment here because it’s the sort of thing Dan might blank:

but I don’t want to get hellbanned by dang.

Who gives a fuck about HN. Consider the notion that dang is, in fact, partially to blame for this entire fiasco. He runs an easy-to-propagandize platform due to how much control of information is exerted by upvotes/downvotes and unchecked flagging. It’s caused a very noticeable shift over the past decade among tech/SV/hacker voices – the dogmatic following of anything that Musk or Thiel shit out or say, this community laps it up without hesitation. Users on HN learn what sentiment on a given topic is rewarded and repeat it in exchange for upvotes.

I look forward to all of it burning down so we can, collectively, learn our lessons and realize that building platforms where discourse itself is gamified (hn, twitter, facebook, and reddit) is exactly what led us down this path today.

wow the sanewashing of trump in those threads is insane

Somewhat related, I was thinking about how different this blog post from a DOGE “employee” reads during Elon Musk’s coup attempt: https://vinay.sh/i-am-rich-and-have-no-idea-what-to-do-with-my-life/ – it was discussed here but no one really knew what was coming at the time.

There’s also a youtube video which has been popping off on social media over the last week and is a gentle introduction to techno-fascists for the general public.

d’ya…d’ya think they’ll make it all the way along the path, to the realization?

no

The ones who walk towards Omelas. (And Omelas is fine actually, you damn hippy).

“Why Don’t We Just Kill the Kid In the Omelas Hole” by Isabel J. Kim

Finally it turns out torturing the kid was unnecessary and spreading out the suffering would have worked fine. All Omelas had to do was raise their income tax a little bit.

Penny Arcade weighs in on deepseek distilling chatgpt (or whatever actually the deal is):

“Wow, this Penny Arcade comic featuring toxic yaoi of submissive Sam Altman is lowkey kinda hot” is a sentence neither I nor any LLM, Markov chain or monkey on a typewriter could have predicted but now exists.