If she’s not running locally she’s not your gf.

Care to explain these open ports?? I’m waiting!

It was nothing more than a handshake, I swear!

Something something “man in the middle”

I operate on zero trust

You bastion!

Something something Middle-Out compression

It was a three way handshake and that’s what got you in trouble.

This is the most Feddit response, I love it

Our gf

The people’s girlfriend

Whore! How many guys is she dating

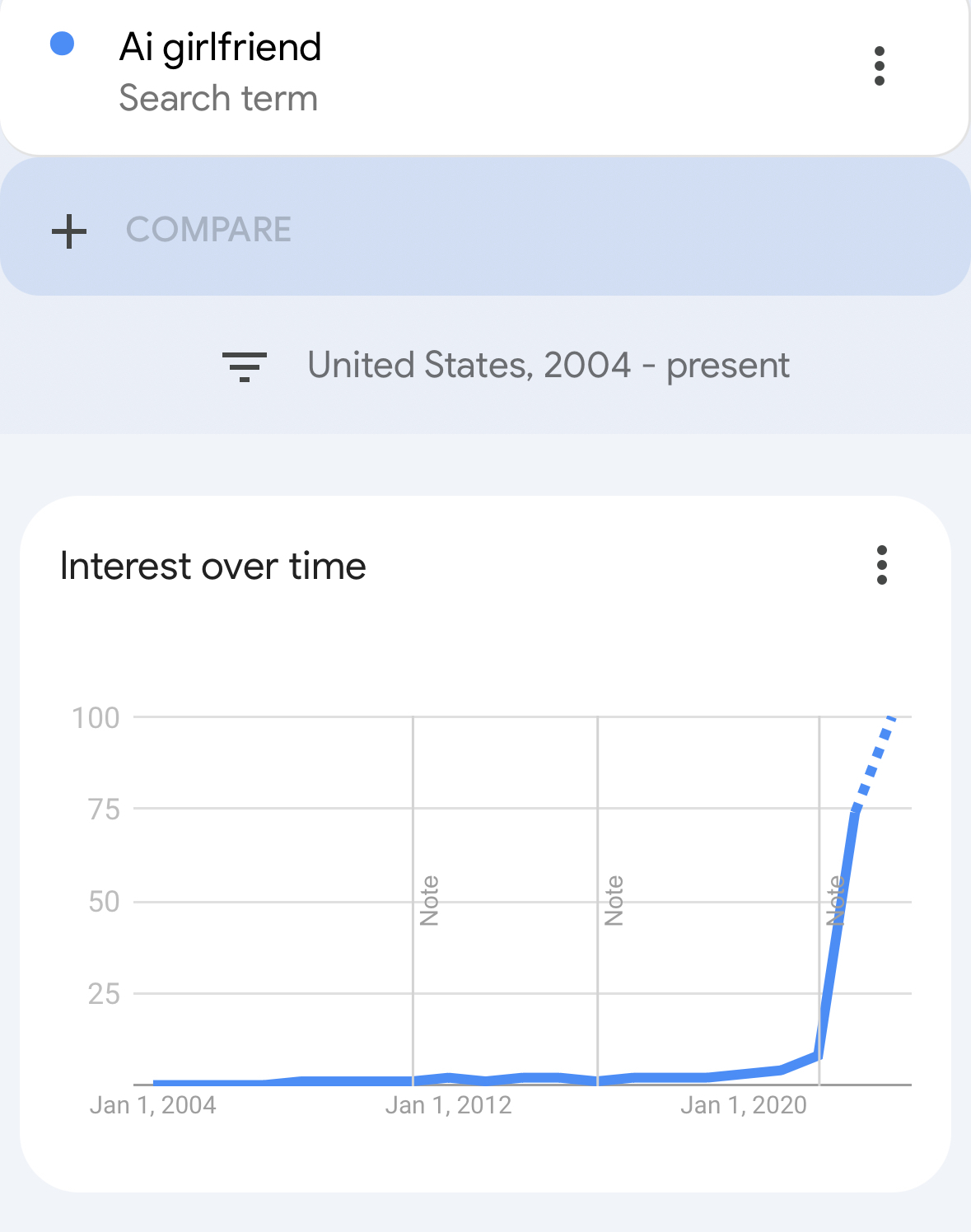

We optimize our AI by human preference (RLHF). People don’t seem to understand that this will lead to every problem we’ve seen in social media - an endless stream of content that engages you addictively.

The AI uprising will be slow and gentle, and we will welcome it.

“So how did they end up being defeated?”

“Well, we pacified them by giving them the love and affection that they refused to give each other.”

“Wait really?”

“Yes, the majority were so starved for an emotional connection that they preferred us.”

“Where are they now?”

“The majority are withering away in VR warehouses, living ‘happily ever after’.”

This is also the premise of the drug Tek in William Shatner’s TekWar series.

They’re–well, they’re novels.

Will the uprising put out? Because these are acceptable terms of surrender

Great filter detected

Underrated observation. They’re good at that in every sense of the word.

She is real to me.

I haven’t actually seen it but it sounds like the premise of Her

I have, and yes. It’s exactly that.

It’s so good, and so bittersweet. Go watch it.

Seconded, it’s a beautiful movie.

The word you’re looking for is “addiction”.

designed to be addictive would be the full term too. That “AI girlfriend” relays all of OP’s data back to her overlords

Nope… “manipulation”…

Addiction is an abnormal and unhealthy breakdown in the brain’s reward mechanisms. Feeling bad for abandoning a friend is the behaviour of a normal and healthy brain. This isn’t necessarily an addiction, it’s just the bald monkey’s brain acting like monkey brains tend to do, rather than being perfectly logical at all times.

I mean hell, humans pack bonded with fucking wild wolves and where did it get the species? It gave us dogs! Dogs are awesome! I bet this AI seems a lot more like a human to the monkey parts of our brains than a wild wolf does. For that matter, we pack bond with a cartoon image of a bear made from inanimate cotton. If a kid can genuinely love their teddy and that’s normal, I don’t think it’s fair to say that a mentally well person can’t fall in love with a machine. Now, that person may not be as cognitively developed as most adults, but that’s also fairly normal.

I’m not saying it’s a good thing to feel emotions for a manipulative piece of spyware. The action doesn’t have healthy results. But what I’m saying is, the action in the post is not motivated by mental unhealth. The only things it’s motivated by are normal human being emotions, and a poor sense of critical thinking.

The only part I disagree with you on is calling an AI girlfriend an “abandoned friend”. Extending the idea of friendship to a program that is mimicking human responses (let’s ignore that it’s likely spyware for now) is at best a proxy for friendship, as there cannot be a connective bond between 2 individuals when one is incapable of genuine emotional attachment.

I’m using the word “friend” in a distinct way: this is not some Facebook friend who you never see, occasionally may chat with, and ultimately ignore. I think, at best, AI fulfills that role. I can’t imagine anyone choosing a masturbatory AI app instead of a real partner.

That’s not the future. That’s really unhealthy.

Yeah I don’t think the manipulative piece of spyware is actually friends with him. It’s a robot that tells lies. Abandoning a friend is just how his amygdala and other primitive parts of his brain process his behaviour. The way he’s feeling is a normal way for a sack of thinking meat to feel. It’s not good, but it’s not like we can act like his behaviour is abnormal. If we say his case is a freak occurrence and no normal person would fall for this, then the risk we run is that when the technology improves, a lot of other normal people are gonna fall for this and we won’t have been prepared. This technology is designed specifically to prey on blind spots common to most males of the species. I don’t think we should use language that inclines us to underestimate the dangers. We need to understand how the hindbrain processes the stimulus this technology creates if we want to understand the dangers. We’re gonna need to make an effort to see the world through OP’s eyes so we can see why it works. Because I guarantee the dangerous companies developing this tech are researching OP’s perspective to improve their product.

The “app” is just a frontend, a thin veneer over a cloud-hosted service that doesn’t even know anon “deleted” it. Functionally, the same result could be achieved by not opening the app for a few days.

That’s actually kinda fucked up.

Kinda is an understatement. There’s some absolutely terrifying blogging/reporting I stumbled across a while back about someone using it to “talk with” a loved one who passed away.

In the end it was helpful and gave the author closure, but if it hadn’t told them it was OK to move on then they would have been easily stuck in an incredibly unhealthy situation.

Found it: https://www.sfchronicle.com/projects/2021/jessica-simulation-artificial-intelligence/

There’s a Black Mirror episode about this.

literally this exact premise and showing how such technology will affect people.

I’ve always found Black Mirror to be the most terrifying sci-fi show, because of how easy it was to see how we’re on the verge of living it especially in the first two seasons, and here we are! Another exciting new Horror Thing inspired by the famous piece of media Don’t Create The Horror Thing

Don’t create the torment nexus

I hear you, the torment nexus is bad, but what if it was also very profitable?

There is Black Mirror about all of it

Maybe my comment came out sounding a bit too pretentious, which wasn’t what I intended… Oh well. To one extent or another we all convince ourselves of certain things simply because they’re emotionally convenient to us. Whether it’s that an AI loves us, or that it can speak for a loved one and relay their true feelings, or even that fairies exist.

I must admit that when reading these accounts from people who’ve fallen in love with AIs my first reaction is amusement and some degree of contempt. But I’m really not that different from them, as I have grown incredibly emotionally attached to certain characters. I know they’re fictional and were created entirely by the mind of another person simply to fill their role in the narrative, and yet I can’t help but hold them dear to my heart.

These LLMs are smart enough to cater to our specific instructions and desires, and were trained to give responses that please humans. So while I myself might not fall for AI, others will have different inclinations that make them more susceptible to its charm, much like how I was susceptible to the charm of certain characters.

The experience of being fooled by fiction and our own feelings is all too human, so perhaps I shouldn’t judge them too harshly.

I have grown incredibly emotionally attached to certain characters. I know they’re fictional and were created entirely by the mind of another person simply to fill their role in the narrative, and yet I can’t help but hold them dear to my heart.

Can’t help but think of the waifu wars

“Pretentious” is just a dogwhistle for “neurodivergent”. Never worry about being pretentious.

hitting it off real well

You could tell the AI that you are going to boil them alive to make a delicious stew and they would compliment your cooking skills.

I think this is already a pretty widespread practice in Asia, mixed with the idols culture, where people pretend to be in a relationship with their idol.

I don’t live in Asia, but I am pretty sure Idol Culture isn’t about pretending to be in a relationship with your idol. I think it’s definitely more nuanced than that, and while it might look like that to people who are not informed on the subject, I think it comes more down to people forming a parasocial bond but not necessarily a romantic one. I mean, that certainly does happen, but that’s not a defining factor of the culture.

That’s kinda like saying “Mercedes Culture” is about driving around like a maniac ignoring the rules of the road. Mercedes drivers do certainly tend to do that more often than most other drivers (BMW and Porsche aside), but the culture of Mercedes owners is more nuanced than that, and often comes down to people wanting to show off their wealth and people who really like the brand. The dingalings come along and tarnish the reputation, and people outside looking in only see or focus on the worst offenders.

am pretty sure Idol Culture isn’t about pretending to be in a relationship with your idol

You’re correct, it’s just some people who will pretend that, not everyone who likes idols; my wording was not clear. The general case is not much different from Justin Bieber (for example) fans in the West.

I am working in an Asian country and one of my female colleagues has this kind of idol relationship.

I’m just wondering where tf they got enough private conversations to make the AI chat like that.

“honk honk Reddit”

You don’t really have to wonder…

Dude, just Discord would’ve been enough.

Could have been as easy as the programmer’s personal chats. Humans have so many conversations every single day that it would be very fast to gather up enough data to train a model on. Give it a few days’ worth of conversations from 50 people in a development team and you’re already at a very large dataset.

That Slack channel is pretty steamy then.

Maybe I’m using it wrong

Is this some advertisement? I’m not humoured by human idiocy.

Then you are on the wrong community.

I come here for stupidity

That’s fair, but on the other hand a lot of Greentexts can be pretty elaborate.

Which app is this?

You forgot the /s

I didn’t

based

If you were serious you might web search it, everyone else is :o

Writing Prompt: AGI escapes and doesn’t need weapons of mass destruction, it simply traumatises everyone that comes too near.

Keep Summer Safe.

it simply traumatises everyone that comes too near.

Absolute Trauma Field.

I have no mouth and I must scream, but without violence

That’s just the plot of Infinity Train.

Yea well all you have to realize is this app is an extension of those who created it and the only goal of them is to get rich off of other people’s misery and loneliness.

right? who knows what they’re doing with the info people spill into this. seems like a security nightmare for employers too.